diff --git a/Software_Carbon_Efficiency_Rating(SCER)/CLA.md b/CLA.md

similarity index 100%

rename from Software_Carbon_Efficiency_Rating(SCER)/CLA.md

rename to CLA.md

diff --git a/README.md b/README.md

index 585aec4..f910dee 100644

--- a/README.md

+++ b/README.md

@@ -39,7 +39,7 @@ Carbon Efficiency: The amount of carbon dioxide equivalent (CO2e) emitted per US

### Meeting Details

- Bi-weekly on a Wednesday @ 09:00 PT / 1700 BST

-- [Become a member](https://wiki.greensoftware.foundation/orientation/signup)

+- [Become a member](https://wiki.greensoftware.foundation/register)

### Software Carbon Efficiency Rating

- [Current baseline](https://github.com/Green-Software-Foundation/scer)

diff --git a/Resources/events.md b/Resources/events.md

deleted file mode 100644

index fe97af1..0000000

--- a/Resources/events.md

+++ /dev/null

@@ -1,4 +0,0 @@

-Upcoming events for participation:

-- [Open Source Summit Japan](https://events.linuxfoundation.org/open-source-summit-japan/)

-- [Open Source Summit Asia](https://events.linuxfoundation.org/kubecon-cloudnativecon-open-source-summit-ai-dev-china/)

-- [Gosim China 2024](https://china2024.gosim.org/)

diff --git a/Resources/slide_decks.md b/Resources/slide_decks.md

deleted file mode 100644

index 8076c3e..0000000

--- a/Resources/slide_decks.md

+++ /dev/null

@@ -1,13 +0,0 @@

-List SCER presentation and resources here:

-- [Advancing Responsible AI: Unveiling the Software Carbon Efficiency Rating (SCER) for AI Models (PDF and Youtube Video)](https://aideveu24.sched.com/event/1c1lg?iframe=no) at LF AI_dev: Open Source GenAI & ML Summit Europe 2024

-

-

-

-

-

-

-References:

-- NutriScore: https://en.wikipedia.org/wiki/Nutri-Score

-- EnergyGuide: https://en.wikipedia.org/wiki/EnergyGuide

-- EnergyStar: https://www.energystar.gov/

-- ISO 9000, Quality Management Standard Framework: https://www.iso.org/standards/popular/iso-9000-family

diff --git a/SCER_Certification_Program/Reporting.md b/SCER_Certification_Program/Reporting.md

deleted file mode 100644

index c9acbe8..0000000

--- a/SCER_Certification_Program/Reporting.md

+++ /dev/null

@@ -1,3 +0,0 @@

-### Report SCER Usage Here (Organization Name, Conformance Levels, References):

-Example:

-- GSF, Level 2, https://greensoftware.foundation/

diff --git a/SCER_Certification_Program/SCER_Certification_Prorgram_for_LLMs.md b/SCER_Certification_Program/SCER_Certification_Prorgram_for_LLMs.md

deleted file mode 100644

index 3e82ed2..0000000

--- a/SCER_Certification_Program/SCER_Certification_Prorgram_for_LLMs.md

+++ /dev/null

@@ -1,50 +0,0 @@

-### SCER Certification Program for LLMs

-

-**Introduction:**

-The SCER (Software Carbon Efficiency Rating) certification program for Large Language Models (LLMs) aims to promote transparency, accountability, and environmental responsibility in the development and deployment of AI technologies. The program is inspired by successful initiatives like [NutriScore](https://www.santepubliquefrance.fr/en/nutri-score), [Energy Star](https://www.energystar.gov/), and [EnergyGuide](https://consumer.ftc.gov/articles/how-use-energyguide-label-shop-home-appliances), providing a clear and standardized framework for assessing and communicating the carbon efficiency of LLMs.

-

-**Certification Levels:**

-The SCER certification program has two levels:

-

-**Level 1. SCER Process Conformance Certification**

- - **Description:** This certification level ensures that organizations adhere to the SCER standardized framework and complete the four-step process.

- - **Instructions:**

- 1. Complete the four-step process outlined by [SCER for LLMs](https://github.com/chrisxie-fw/scer/blob/Dev/use_cases/SCER_FOR_LLM/SCER_For_LLM_Specification.md):

- - **Step 1:** LLMs Categorization

- - **Step 2:** Carbon Benchmarking

- - **Step 3:** Rating

- - **Step 4:** Visuals and Labelling

- 2. Report usage and conformance to the [SCER Working Group (WG)](Reporting.md) within the Green Software Foundation (GSF).

- 3. Use the SCER Process Conformance Label

- - **Outcome:** Organizations meeting these criteria are granted the right to display the SCER Process Conformance label on their products or services.

- - **Label:**

-

-  -

- (Experimental label image)

-

-**Level 2. SCER Rating Certification**

- - **Description:** This certification level assesses the actual carbon efficiency rating of the LLMs and provides a rating based on the SCER framework. Achieving this certification also implies compliance with the Level 1 SCER Process Conformance Certification.

- - **Instructions:**

- 1. Use the SCER for LLMs process to obtain a carbon efficiency rating.

- 2. Display the rating on the relevant products and services.

- 3. Report usage and conformance to the [SCER WG in GSF](Reporting.md).

- - **Outcome:** Organizations meeting these criteria are granted the right to display the SCER Rating label on their products or services.

- - **Label:**

-

-

-

- (Experimental label image)

-

-**Level 2. SCER Rating Certification**

- - **Description:** This certification level assesses the actual carbon efficiency rating of the LLMs and provides a rating based on the SCER framework. Achieving this certification also implies compliance with the Level 1 SCER Process Conformance Certification.

- - **Instructions:**

- 1. Use the SCER for LLMs process to obtain a carbon efficiency rating.

- 2. Display the rating on the relevant products and services.

- 3. Report usage and conformance to the [SCER WG in GSF](Reporting.md).

- - **Outcome:** Organizations meeting these criteria are granted the right to display the SCER Rating label on their products or services.

- - **Label:**

-

-  -

- (Experimental label image)

-

-**Reporting Requirements:**

-- Both certification levels require organizations to report their usage and conformance to the SCER WG in the GSF.

-- Organizations are encouraged to voluntarily report any changes when their conformance status is altered.

-

-**Standards and Compliance:**

-- SCER standards are periodically reviewed and updated to reflect technological advancements and market changes, ensuring the label remains a mark of best practices for high carbon efficiency.

-- The SCER WG monitors compliance through ongoing testing and market surveillance.

-- Products or services found to be non-compliant can have their certification revoked.

-

-**Summary:**

-

-The SCER certification program for LLMs is a comprehensive initiative designed to encourage sustainable practices in AI development. By providing clear guidelines and rigorous standards, SCER aims to reduce the carbon footprint of LLMs and promote environmental responsibility within the AI industry.

diff --git a/SCER_Certification_Program/images/SCER_Label.webp b/SCER_Certification_Program/images/SCER_Label.webp

deleted file mode 100644

index fe10132..0000000

Binary files a/SCER_Certification_Program/images/SCER_Label.webp and /dev/null differ

diff --git a/SCER_Certification_Program/images/SCER_Rating_Label.webp b/SCER_Certification_Program/images/SCER_Rating_Label.webp

deleted file mode 100644

index 5947732..0000000

Binary files a/SCER_Certification_Program/images/SCER_Rating_Label.webp and /dev/null differ

diff --git a/SCER_Certification_Program/images/scer_process_conformance.png b/SCER_Certification_Program/images/scer_process_conformance.png

deleted file mode 100644

index 30bad43..0000000

Binary files a/SCER_Certification_Program/images/scer_process_conformance.png and /dev/null differ

diff --git a/SCER_Certification_Program/images/scer_rating_conformance.png b/SCER_Certification_Program/images/scer_rating_conformance.png

deleted file mode 100644

index a16349a..0000000

Binary files a/SCER_Certification_Program/images/scer_rating_conformance.png and /dev/null differ

diff --git a/SPEC.md b/SPEC.md

new file mode 100644

index 0000000..1011226

--- /dev/null

+++ b/SPEC.md

@@ -0,0 +1,195 @@

+---

+version: 0.0.1

+---

+# Software Carbon Efficiency Rating (SCER) Specification

+

+## Introduction

+

+

+In the context of global digital transformation, the role of software in contributing to carbon emissions has become increasingly significant. This necessitates the development of standardized methodologies for assessing the environmental impact of software systems.

+

+Rationale:

+

+- The Rising Carbon Footprint of Software: The digitization of nearly every aspect of modern life has led to a surge in demand for software solutions, subsequently increasing the energy consumption and carbon emissions of the IT sector.

+- The Need for a Unified Approach: Currently, the lack of a standardized system for labeling the carbon efficiency of software products hinders effective management and reduction of carbon footprints across the industry.

+

+This document establishes the Software Carbon Efficiency Rating (SCER) Specification, a standardized framework for labeling the carbon efficiency of software systems. The SCER Specification is intended to serve as a model for labeling software products according to their Software Carbon Intensity (SCI), and it is adaptable to different software categories.

+

+

+## Scope

+

+This specification provides a framework for displaying, calculating, and verifying software carbon efficiency labels. By adhering to these requirements, software developers and vendors can offer consumers a transparent and trustworthy method for assessing the environmental impact of software products.

+

+It outlines the label format, presentation guidelines, display requirements, computation methodology used to determine the software's carbon efficiency, and the requirements for providing supporting evidence to demonstrate the accuracy of the carbon efficiency claims.

+

+This specification is intended for a broad audience involved in the creation, deployment, or use of software systems, including but not limited to:

+- Software developers

+- IT professionals

+- Policy-makers

+- Business leaders

+

+

+## Normative references

+

+ISO/IEC 21031:2024

+Information technology — Software Carbon Intensity (SCI) specification.

+

+ISO/IEC 40500

+Information technology — W3C Web Content Accessibility Guidelines (WCAG) 2.0.

+

+ISO/IEC 18004:2024

+Information technology — Automatic identification and data capture techniques — QR code bar code symbology specification.

+

+## Terms and definitions

+

+For the purposes of this document, the following terms and definitions apply.

+

+ISO and IEC maintain terminological databases for use in standardization at the following addresses:

+- ISO Online browsing platform: available at https://www.iso.org/obp

+- IEC Electropedia: available at http://www.electropedia.org/

+

+> [!NOTE]

+> TODO: Update these definitions

+

+- Software Application: TBD

+- Software Carbon Efficiency: TBD

+- Software Carbon Intensity: TBD

+- Carbon: TBD

+- Functional Unit: TBD (From SCI)

+- Manifest File: TBD

+- QR Code: TBD

+

+

+The following abbreviations are used throughout this specification:

+

+> [!NOTE]

+> TODO: Update these abbreviations

+- SCI

+- SCER

+

+> [!NOTE]

+> For ease of reference; to be removed in the final spec.

+> - Requirements – shall, shall not

+> - Recommendations – should, should not

+> - Permission – may, need not

+> - Possibility and capability – can, cannot

+

+## Core Requirements

+

+### Ease of understanding

+A label that is hard to understand or requires expertise unavailable to most software consumers would defeat the purpose of adding transparency and clarity.

+

+SCER labels shall be uncluttered and shall have a clear, simple design that can be easily understood.

+

+### Ease of Verification

+The SCER label Shall make it easy for a consumer of a software application to verify any claims made.

+

+Consumers should have all the information they need to verify any claims made on the label and to ensure the underlying calculation methodology or any related specification has been followed accurately.

+

+### Accessible

+The SCER label and format shall be accessible and shall meet accessibility specifications.

+

+### Language

+The SCER label Should be written using the English language and alphabet.

+

+## Calculation Methodology

+

+The SCI shall be used as the calculation methodology for the SCER label.

+

+Any computation of an SCI score for the SCER label Shall adhere to all requirements of the SCI specification.

+

+> Note: SCI is a software carbon efficiency specification whose score is expressed as "Carbon per Functional Unit" of a software product, for example, Carbon per Prompt for a Large Language Model.

+

+## Presentation Guidelines

+

+Components of a SCER Label

+

+### SCI Score:

+The presentation of the SCI score Shall follow this template:

+

+`[Decimal Value] gCO2eq per [Functional Unit]`

+

+- Where `[Decimal Value]` is the SCI score itself

+- Where the common term `Carbon` Shall be used to represent the more technical term `CO2e` (Carbon Dioxide Equivalent)

+- The symbol `/` Shall Not be used in place of `Per`

+- Where `[Functional Unit]` is text describing the Functional Unit as defined in the SCI calculation for this software application
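Reviewer sketch (not part of the committed SPEC.md): the template above can be illustrated with a small formatter. The function name `format_sci_score` is hypothetical, the output follows the literal template string `gCO2eq per`, and rejecting any `/` in the functional unit is a stricter assumption than the rule as written.

```python
from decimal import Decimal


def format_sci_score(value: Decimal, functional_unit: str) -> str:
    """Render '[Decimal Value] gCO2eq per [Functional Unit]' (hypothetical helper)."""
    unit = functional_unit.strip()
    if not unit:
        raise ValueError("functional unit must not be empty")
    # Assumption: treat any '/' in the unit as a violation of the 'per' rule.
    if "/" in unit:
        raise ValueError("use 'per' instead of '/'")
    if value < 0:
        raise ValueError("SCI score must not be negative")
    # The 'f' format avoids scientific notation for very small scores.
    return f"{format(value, 'f')} gCO2eq per {unit}"


print(format_sci_score(Decimal("0.42"), "Prompt"))
```

Using `Decimal` rather than `float` keeps the printed value identical to the reported score, which matters when the label is later compared with the manifest.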

+

+### SCI Version

+The SCI version Should be visible, even in small sizes.

+

+The SCI version Shall describe which version of the SCI specification this SCER label complies with and have the following format:

+

+`[Short Name] [Version]`

+

+- Where `[Short Name]` is the abbreviated version of the SCI specification this SCER label is representing

+- Where `[Version]` is the official SCI specification version this label refers to.

+

+For example:

+- `SCI 1.1`

+- `SCI AI 1.0`
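Reviewer sketch (not part of the committed SPEC.md): one possible way to check a version string against the `[Short Name] [Version]` format. The regular expression is an assumption inferred only from the two examples above (a short name starting with `SCI`, optionally followed by alphabetic words, then a dotted numeric version); the spec itself does not define a grammar.

```python
import re
from typing import NamedTuple


class SciVersion(NamedTuple):
    short_name: str
    version: str


# Assumed grammar: "SCI", optional alphabetic qualifiers, then a dotted number.
_SCI_VERSION = re.compile(
    r"^(?P<short_name>SCI(?: [A-Za-z]+)*) (?P<version>\d+(?:\.\d+)*)$"
)


def parse_sci_version(text: str) -> SciVersion:
    """Split an SCI version string into short name and version (hypothetical helper)."""
    match = _SCI_VERSION.match(text.strip())
    if match is None:
        raise ValueError(f"not a valid SCI version string: {text!r}")
    return SciVersion(match["short_name"], match["version"])


print(parse_sci_version("SCI AI 1.0"))
```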

+

+### QR Code

+The QR Code Shall be a URL represented as a QR code as per ISO/IEC 18004:2024.

+

+The URL Shall point to a publicly accessible website where a manifest file that meets the requirements in the *Supporting Evidence* section can be downloaded.

+

+The URL Shall Not require a login, and it Shall be publicly accessible by anonymous users or non-human automated bots/scripts.

+

+## Display Requirements

+

+The SCER Label Shall conform to this layout:

+

+> [!NOTE]

+> This is a WIP; the current design doesn't meet size flexibility requirements.

+

+

+

+- Color: ??

+- Size: ??

+- Placement: ??

+- Font: ??

+- Example: ??

+

+## Supporting Evidence

+Per the presentation guidelines, the SCER label will link to a manifest file that provides evidence to support any claims made on the label.

+

+The manifest file Shall meet three criteria to pass as supporting evidence.

+

+### Conformance

+Evidence that the underlying SCI requirements have been met in the computation of the SCI score.

+

+The Manifest File Shall clearly describe the Software Boundary per the SCI specification.

+

+The Manifest File Shall follow the Impact Manifest Protocol Standard for communicating environmental impacts.

+

+The Manifest File should use granular data that aligns with SCI recommendations.

+

+### Correctness

+Correctness means confirming that the numbers in the manifest file match the information on the SCER label.

+

+The Manifest File shall have an aggregate value for the SCI score that matches the reported score on the SCER label.

+

+The Manifest File shall have a Functional Unit that matches the reported Functional Unit on the SCER label.
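Reviewer sketch (not part of the committed SPEC.md): the two correctness rules could be checked roughly as follows. The manifest is modelled as a flat dictionary with the hypothetical keys `sci` and `functional_unit`; the real manifest schema is not given in this diff. Comparing functional units case-insensitively is also an assumption.

```python
from decimal import Decimal


def label_matches_manifest(label_score: str, label_unit: str, manifest: dict) -> bool:
    """Check the label's score and functional unit against a manifest (hypothetical keys)."""
    # Compare as Decimal so "0.420" on the label equals 0.42 in the manifest.
    score_ok = Decimal(label_score) == Decimal(str(manifest["sci"]))
    # Assumption: whitespace and letter case are not significant in the unit text.
    unit_ok = (
        label_unit.strip().casefold()
        == str(manifest["functional_unit"]).strip().casefold()
    )
    return score_ok and unit_ok


manifest = {"sci": 0.42, "functional_unit": "prompt"}
print(label_matches_manifest("0.42", "Prompt", manifest))
```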

+

+### Verification

+Verification is the act of confirming the evidence supports the claim.

+

+Verification of a SCER label Shall be possible using open source software and open data.

+

+Verification Shall be free for the end user and Shall Not require purchasing licenses for software or data or logging into external systems.

+

+If Verification requires access to data, that data Shall also be publicly available and free to use.

+

+

+### 4. Appendices

+

+Supporting documents, example calculations, and reporting templates.

+

+---

+

+### 5. References

+

+List of references used in the creation of the SCER Specification.

+

+---

+

diff --git a/Software_Carbon_Efficiency_Rating(SCER)/Discussions.md b/Software_Carbon_Efficiency_Rating(SCER)/Discussions.md

deleted file mode 100644

index 88f4b75..0000000

--- a/Software_Carbon_Efficiency_Rating(SCER)/Discussions.md

+++ /dev/null

@@ -1,49 +0,0 @@

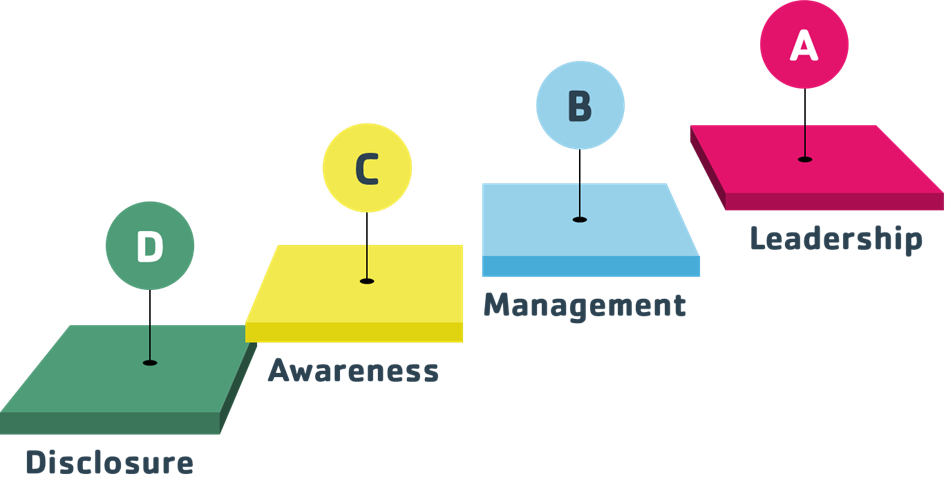

-## Discussions

-1. Different standard rating programs call for different rating scope, calculation, and algorithm, etc. Some represent the ratings using number scores (e.g. EnergyStar), some using alphabetic letters (NutriScore). Therefore, in order for SCER to be widely adopted, and be flexible enough to fit a wide range of use case scenarios, the design of the SCER spec shall adopt the generic and modular approach for standardization, and enable domain experts from different industry verticals to define category-specific SCER standard, based on the generic SCER spec.

-2. SCI addressed the score part, but lacking **relative rating specification**. Therefore, SCER is supplementing SCI spec to provide an easy-to-understand rating mechanism. SCER also defines the standard specification for software categorization, benchmark definition, and rating definition. These are used as guidelines for users of SCER spec to create their own category-specific SCER specifications.

-2. With respect to the use of SCER spec, it involves data collection and publication of the ratings for various types or categories of software. For instance, refer to [MLPerf](https://mlcommons.org/) and [Geekbench](https://www.geekbench.com/), both of which defined a standard set of workload, in the case of MLPerf, the workload is open sourced, in the case of Geekbench, the workload is close sourced. Both of them provide a standard set of workload and benchmarks. They also provide a means of data collection and intuitive result publication. What are the directions of SCER in this respect? Do we want to define the spec so that other people can use it to define their own category specific SCER spec, or do we create a SCER plaform that's more like MLPerf or Geekbench where benchmarks and data collection of workload are crowd-sourced, and the results are published on a central SCER platform?

-1. House-keeping item: Confirming CLA (Contributor License Agreements) handling:

- - GitHub Integration: There are tools like CLA assistant that integrate with GitHub to manage CLA confirmations. When a contributor submits a pull request, they are prompted to agree to the CLA with a single click if they haven't done so already for that project.

- - Git Signed-off-by Statement: In certain projects, especially those using Git, a contributor might use the signed-off-by statement in their commits as an indication that they agree to the terms of the CLA, though this method is less formal and typically used in conjunction with another method.

-

-

-## References

-This References section demonstrates cases where different rating, labelling systems are used for different use cases and industry verticals.

-

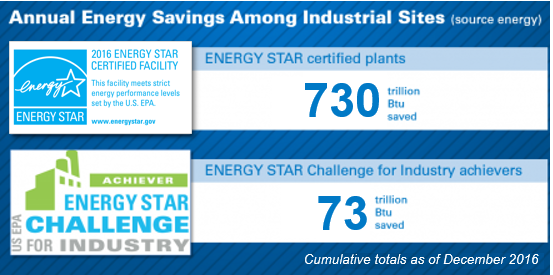

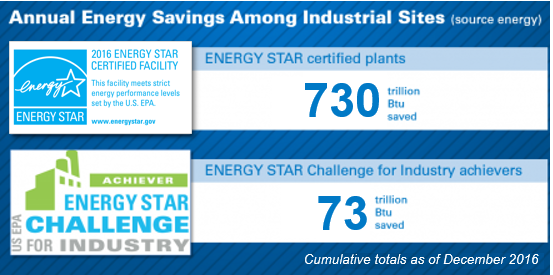

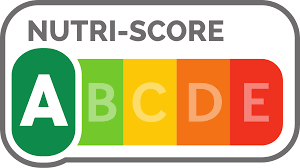

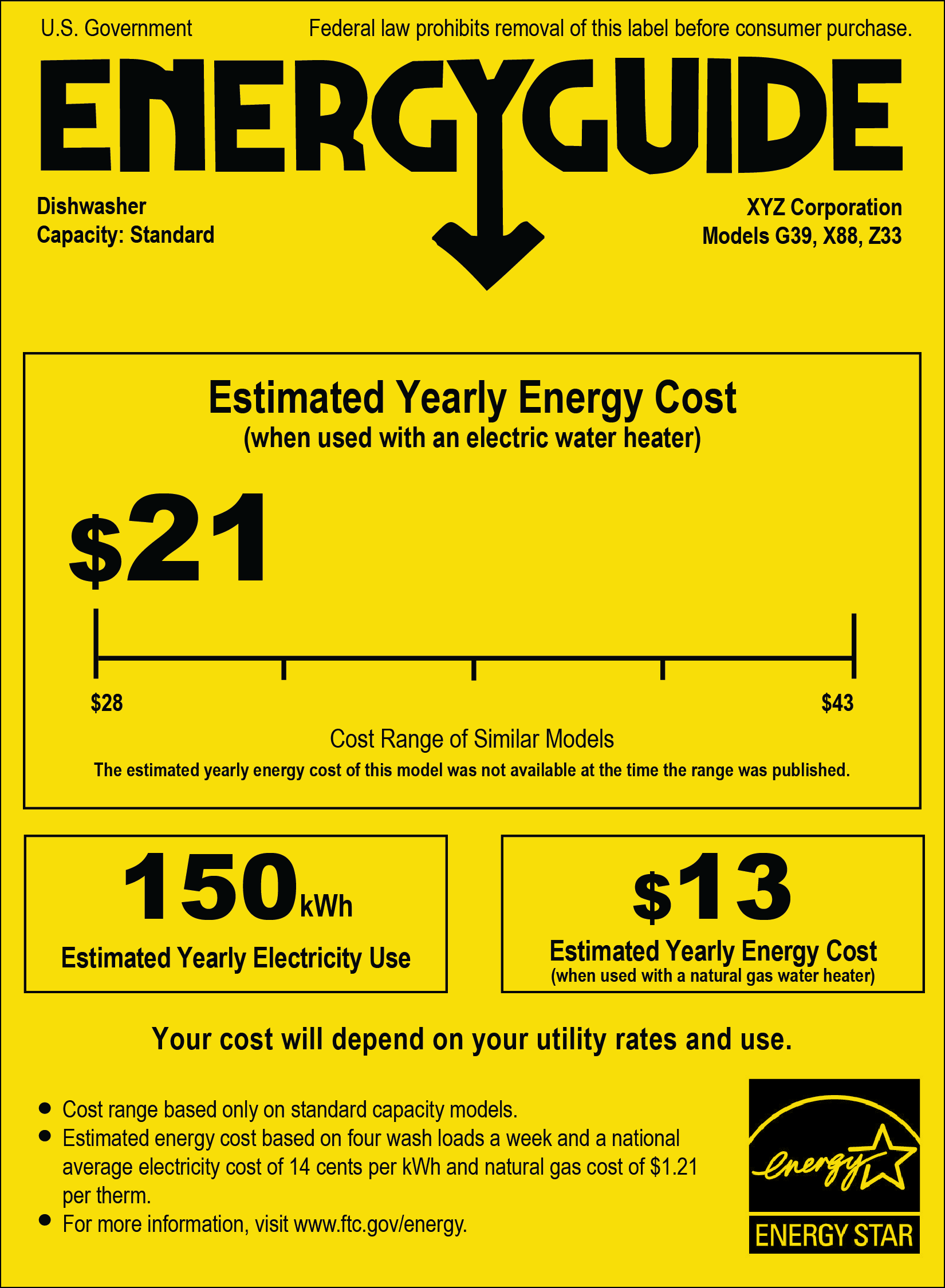

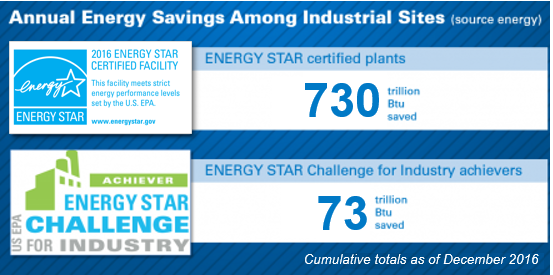

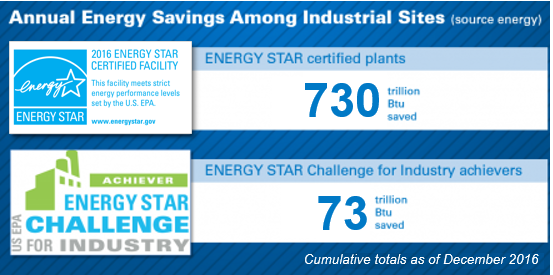

-### EnergyStar:

-

-To earn the ENERGY STAR, eligible commercial buildings must earn an 1–100 ENERGY STAR score of 75 or higher—indicating that they operate more efficiently than at least 75% of similar buildings nationwide. Before applying, a building's application must be verified by a Professional Engineer or Registered Architect.

-

-

-

-

-

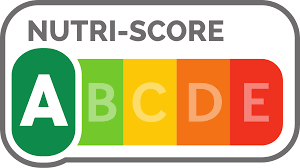

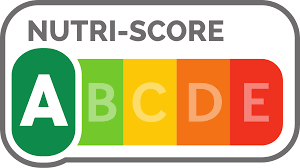

-### Nutri-Score:

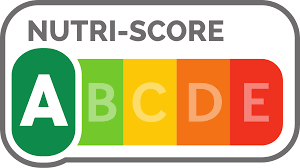

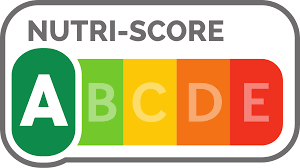

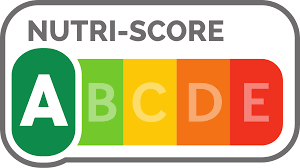

-Nutri-Score is a front-of-package nutritional label that converts the nutritional value of food and beverages into a simple overall score. It is based on a scale of five colors and letters:

-- A: Green to represent the best nutritional quality

-- B: Light green, meaning it's still a favorable choice

-- C: Yellow, a balanced choice

-- D: Orange, less favorable

-- E: Dark orange to show it is the lowest

-

-The Nutri-Score calculation pinpoints the nutritional value of a product based on the ingredients. It takes into account both positive points (fiber content, protein, vegetables, fruit, and nuts) and negative points (kilojoules, fat, saturated fatty acids, sugar, and salt).

-

-The Nutri-Score is calculated per 100g or 100ml. The goal of the Nutri-Score is to influence consumers at the point of purchase to choose food products with a better nutritional profile, and to incentivize food manufacturers to improve the nutritional quality of their products.

-

-

-

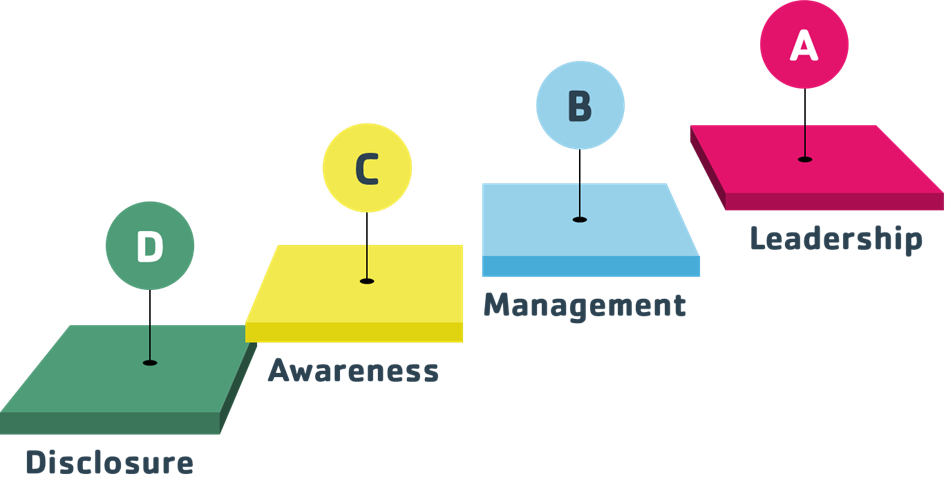

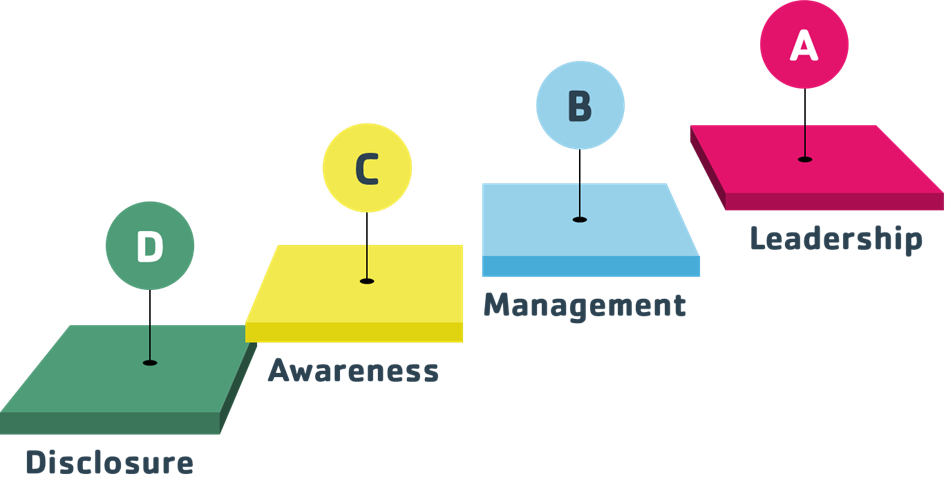

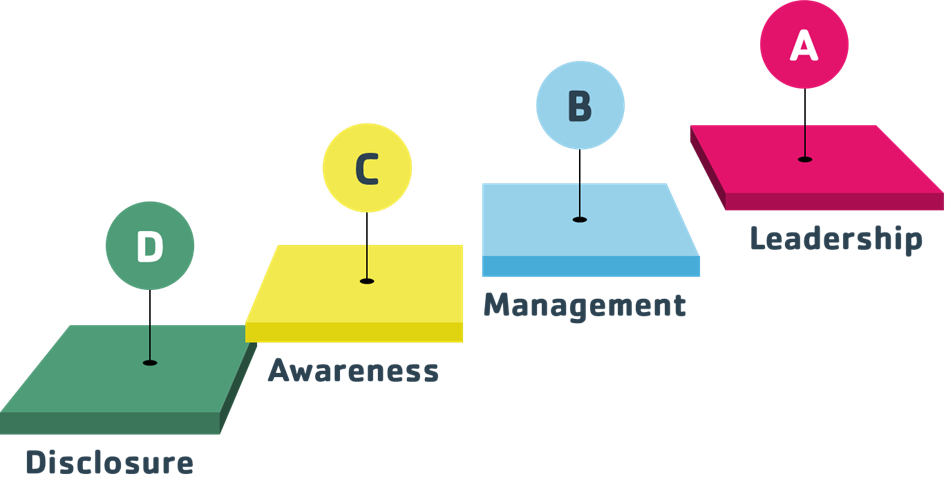

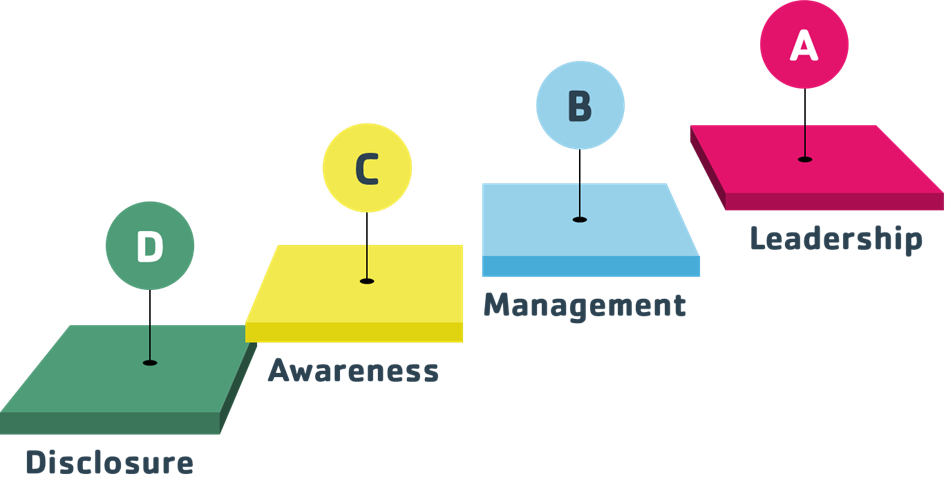

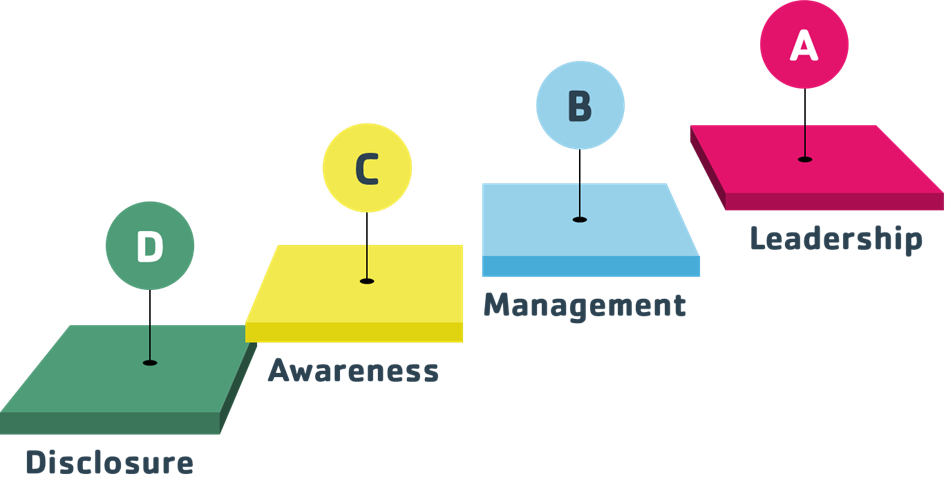

-### CDP:

-[CDP](https://www.cdp.net/en/info/about-us) (formerly Carbon Disclosure Project): A global disclosure system for companies, cities, states, and regions to manage their environmental impacts.

-- Data collection as a form of company disclosure: CDP provides guide that covers the key steps to disclose as a company including setting up a CDP account, responding to the CDP questionnaire(s), and receiving a CDP score.

-- A CDP score is a snapshot of a company’s environmental disclosure and performance. CDP's scoring methodology is fully aligned with regulatory boards and standards, and provides comparability in the market.

-

-

-

-### LEED

-LEED stands for Leadership in Energy and Environmental Design. It is the most widely used green building rating system in the world. LEED is an environmentally oriented building certification program run by the U.S. Green Building Council (USGBC).

-LEED provides a framework for healthy, efficient, and cost-saving green buildings. It aims to improve building and construction project performance across seven areas of environmental and human health.

-

-

-To achieve LEED certification, a project earns points by adhering to prerequisites and credits that address carbon, energy, water, waste, transportation, materials, health and indoor environmental quality. Projects go through a verification and review process by GBCI and are awarded points that correspond to a level of LEED certification: **Certified (40-49 points), Silver (50-59 points), Gold (60-79 points) and Platinum (80+ points)**.

-

\ No newline at end of file

diff --git a/Software_Carbon_Efficiency_Rating(SCER)/SCER for Database Server Software.md b/Software_Carbon_Efficiency_Rating(SCER)/SCER for Database Server Software.md

deleted file mode 100644

index 70d6846..0000000

--- a/Software_Carbon_Efficiency_Rating(SCER)/SCER for Database Server Software.md

+++ /dev/null

@@ -1,186 +0,0 @@

-# SCER Specification for Relational Database Server Software

-

-## Version 0.1

-

-### Abstract

-

-The Software Carbon Efficiency Rating (SCER) for Relational Database Server Software provides a framework for assessing the carbon efficiency of database management systems (DBMS) based on energy consumption and operational efficiency.

-

-### Table of Contents

-

-1. [Introduction](#1-introduction)

-2. [Objective](#2-objective)

-3. [Terminology](#3-terminology)

-4. [Scope](#4-scope)

-5. [Software Categorization](#5-software-categorization)

-6. [Benchmarking](#6-benchmarking)

-7. [Rating System](#7-rating-system)

-8. [Rating Calculation Algorithm](#8-rating-calculation-algorithm)

-9. [Compliance and Verification](#9-compliance-and-verification)

-10. [Future Directions](#10-future-directions)

-

----

-

-### 1. Introduction

-

-Database servers are integral to IT infrastructure with substantial energy footprints. Enhancing their carbon efficiency is vital for minimizing the environmental impact of data-centric operations worldwide.

-

-### 2. Objective

-

-To define and implement a standard that measures and rates the carbon efficiency of relational database server software, encouraging industry progression towards more sustainable practices.

-

-### 3. Terminology

-

-- **SCER**: Software Carbon Efficiency Rating

-- **DBMS**: Database Management System

-- **OPS/Watt-hour**: Operations Per Second per Watt

-- **Carbon Footprint**: The total CO2e emissions associated with the DBMS across its lifecycle.

-

-### 4. Scope

-

-This standard applies to relational database server software encompassing open-source and proprietary systems.

-

-### 5. Software Categorization

-

-Database server software is categorized based on:

-

-- Scale: small, medium, large

-- Use case: transactional, analytical, hybrid

-- Deployment model: on-premises, cloud-based, hybrid

-

-### 6. Benchmarking

-

-Benchmarking focuses on:

-

-- **OPS/Watt-hour**: Database operations executed per watt hour of power consumed.

-- **Transaction Efficiency**: Energy consumed per completed transaction.

-- **Query Optimization**: Energy cost of executing complex queries.

-

-### 7. Rating System

-

-SCER classifications:

-

-- **A**: Highly efficient operations (>X OPS/Watt-hour), advanced query optimization, low energy per transaction.

-- **B**: Moderately efficient ([Y-X] OPS/Watt-hour), standard query optimization, average energy per transaction.

-- **C**: Less efficient (X Streams/Watt-hour), high data transfer efficiency, and optimal user engagement.

-- **B**: Moderately efficient ([Y-X] Streams/Watt-hour), moderate data transfer efficiency, and user engagement.

-- **C**: Less efficient (

- **N**: Not similar

-

-- Relational Database Server Category Example:

-

- | Software Applications| Purpose and Functionality | Platform and Deployment |End User Base|

- | -------- | :---------:| :---------:|:---------:|

- | MiSQL | S | S |S |

- | PSQL | S | S |S |

- | SQLite | S | **N** |S |

-

- In this example, SQLite is a light weight database which is mostly used and deployed in mobile phone platforms that are significantly different from MiSQL and PostgresSQL, so SQLite should not belong in this category, and should not be used for SCER rating in this category.

-

-##### 2.1.2 Extended Components of a Software Categorization

-

-The following aspects may be extended in determining if the software application are in the same category:

-

-- **Type of License:** Differentiating software based on open-source or proprietary status and licensing models.

- - **Open Source vs. Proprietary:** Linux kernel as open-source software vs. Microsoft Windows as proprietary software.

- - **Licensing Model:** Subscription-based model like Adobe Creative Cloud versus a one-time purchase software like Final Cut Pro.

- - **Example Categories:**

- - **Open Source:** Mozilla Firefox: Open-source web browser. LibreOffice: Open-source office suite.

- - **Proprietary:** Microsoft Office: Proprietary office suite. Adobe Acrobat: Proprietary PDF solution.

-

-- **Technical Complexity:** Evaluating the software's architecture and dependencies.

- - **Architecture:** Docker containers showcasing microservices architecture.

- - **Dependencies:** Node.js applications often depend on numerous packages from npm (node package manager).

- - **Example Categories:**

- - **Data Processing:** Hadoop: Framework for distributed storage and processing of large data sets. Elasticsearch: Search and analytics engine for all types of data.

- - **Content Management Systems:** Joomla: Content management system for web content. Drupal: Content management system for complex websites.

-

-- **Integration and Ecosystem:** Assessing compatibility and ecosystem integration.

- - **Compatibility:** Slack integrates with numerous other productivity tools like Trello, Asana, and Google Drive.

- - **Ecosystem:** Apple’s iOS apps that are part of the broader Apple ecosystem, designed to work seamlessly with other Apple devices and services.

- - **Example Categories:**

- - **Customer Relationship Management (CRM):** Salesforce: CRM solution with extensive integration capabilities. HubSpot CRM: Inbound marketing, sales, and service software with integration features.

- - **Development Tools:** JetBrains IntelliJ IDEA: Integrated development environment for software development. Microsoft Visual Studio: Comprehensive development environment with extensive integrations.

-

-- **Regulatory and Compliance Considerations:** Ensuring compliance with applicable regulations and standards.

- - **Healthcare Software:** HIPAA-compliant patient management systems like Cerner.

- - **Financial Software:** SEC-compliant trading platforms like TD Ameritrade.

- - **Example Categories:**

- - **Financial Services:** Thomson Reuters Eikon: Financial data and analytics tool. FactSet: Financial data and software for investment professionals.

- - **Telecommunications:** Amdocs: Software and services for communications, media, and financial services providers. Ericsson: Network software for telecom operators.

-

-- **Feedback and Market Recognition:** Using market recognition and user feedback for category validation.

- - **User Reviews:** Yelp for restaurant and business reviews.

- - **Awards and Recognition:** Autodesk AutoCAD receiving awards for its CAD design software capabilities.

- - **Example Categories:**

- - **Web Browsers:** Google Chrome: Highly rated for speed and integration with Google services. Mozilla Firefox: Praised for privacy features and open-source development.

- - **E-Commerce Platforms:** Shopify: Widely recognized platform for creating online stores. Magento: Renowned open-source e-commerce platform.

-

-- **Industry Vertical:** Classifying software according to the relevant industry vertical.

- - **Healthcare:** Epic Systems for electronic health records management.

- - **Finance:** QuickBooks for accounting in small to mid-sized businesses.

- - **Example Categories:**

- - **Healthcare:** McKesson: Medical supplies ordering and healthcare information technology. Allscripts: Provider of electronic record, practice management, and other clinical solutions.

- - **Education:** Blackboard: Learning management system for education providers. Canvas: Web-based learning management system for educational institutions.

-

-- **Consistent Review and Update:**

- - **Periodic Review:** Google Chrome, which releases updates frequently to reflect the latest web standards and security practices.

- - **Benchmark against Peers:** Comparing Microsoft Office 365 to other productivity suites like Google Workspace for feature set and market position.

- - **Example Categories:**

- - **Operating Systems:** Windows 10: Regular feature updates and security patches. macOS: Annual updates with feature enhancements and security improvements.

- - **Security Software:** Symantec Norton: Consistent updates for virus definitions and security features. McAfee: Frequent updates to maintain security and performance standards.

-

-- **Size of the Software:** Many softwares are comparatively very big in size and this may get multiplied with each install by different users.

- - **Software Size itself:** Software size and depending on the type of installation required for the application, it's size can have an huge impact on the carbon emissions generated by the software.

- - **Dependencies:** Node.js or Angular applications depend on numerous packages and that also needs to be downloaded with each installation.

- - **Examples:**

- - **Desktop/Mobile Applications:** Microsoft Office 365, Visual Studio, OS, etc.

- - **Web Applications:** Microsoft 365 Apps, Open Cloud Architecture, Virtual work environment, etc.

-

-Note:

-1. Should SaaS (Software as a Service) be part of the software categorization? It most likely is, for SCER purposes; a music streaming service is one example. It seems that SCER really means software *induced* carbon efficiency rating, meaning the carbon efficiency resulting from running the software application, regardless of whether the software is run as a service or on a desktop.

-2. Should these aspects of categorizing software be combined with an OR or an AND relationship? Do all software applications belonging to the same category have to have the same answer for each aspect? For example, should Microsoft Office and LibreOffice belong to the same office suite software category for the purpose of SCER rating, even though one is proprietary and the other is open source?

-3. Are these too heavy? Any other considerations missing?

-

----

-

-#### 2.2 Benchmarking Specification

-

-This section of the SCER Specification provides a detailed description of the benchmarking process for measuring the carbon efficiency of software applications. It includes the selection of appropriate metrics, the definition of the benchmarking workload, the necessary test environment setup, and the methodology for executing the benchmarks.

-

-##### 2.2.1 Definition of Benchmarks

-

-Benchmarks serve as the comparative performance standards against which software applications are evaluated for their [Software Carbon Intensity (SCI)](https://github.com/Green-Software-Foundation/sci/blob/main/Software_Carbon_Intensity/Software_Carbon_Intensity_Specification.md) scores or [Social Cost of Carbon (SCC)](https://www.brookings.edu/articles/what-is-the-social-cost-of-carbon/#:~:text=The%20social%20cost%20of%20carbon%20(SCC)%20is%20an%20estimate%20of,a%20ton%20of%20carbon%20emissions.) dollars. The default measurement of benchmarks is in SCI scores.

-

-##### 2.2.2 Unit of Software Function (USF)

-

-The "Unit of Software Function" or USF refers to the most commonly used function of the software, such as:

-

-- **Read/Write Operations** for database software

-- **Rendering a Frame** for graphical software or video games

-- **Handling a Web Request** for web servers

-- **Processing a Data Block** for data processing software

-

-##### 2.2.3 Standardized Tests or Benchmarking Workload

-

-For each category of software, a standardized test can be developed to measure the functional utility of the software, which is then used to calculate its SCI score. The tests will simulate the most commonly used function of the software.

-

-The workload used for benchmarking should be representative of real-world scenarios to ensure the results are relevant and actionable.

-

-- **Workload Profiles**: Detailed descriptions of the different types of workload the software will be subjected to during the benchmarking process.

-- **Workload Metrics**: The quantitative measures of each workload profile, such as the number of simultaneous users, data size, and operation mix.

-

-Example Workload Profiles:

-- For a video streaming service, the workload might simulate streaming at various resolutions.

-- For a customer relationship management (CRM) system, the workload could involve a mix of database reads/writes, report generations, and user queries.

-

-##### 2.2.4 SCI Score Formula

-

-The SCI Score for a software application will be calculated as follows:

-

-$$ \text{SCI Score} = \frac{\text{CO2e emissions}}{\text{USF}} $$

-

-Where CO2e emissions are measured in kilograms and USF is determined by the benchmark test.

-

-The default measurement is SCI, but other metrics, such as kWh, SCC, or CO2e, can be used as the basis for the SCER rating.

-

-##### 2.2.5 Test Environment

-

-The test environment must be standardized to minimize variability in the benchmark results.

-

-- **Hardware Specifications**: A description of the physical or virtual hardware on which the software will be tested, including processor, memory, storage, and networking details.

-- **Software Configuration**: The operating system, middleware, and any other software stack components should be specified and standardized.

-

-Example Test Environments:

-- A specified cloud instance type with a defined operating system and resource allocation.

-- A physical server with controlled ambient temperature and power supply to ensure consistent results.

-

-##### 2.2.6 Test Methodology

-

-A detailed, repeatable method for conducting the benchmarks.

-

-- **Setup Instructions**: Step-by-step guidance to prepare the test environment, including installation and configuration of the software and tools.

-- **Execution Steps**: A sequential list of actions to perform the benchmark, including starting the software, initiating the workload, monitoring performance, and recording results.

-- **Result Collection and Analysis**: Guidelines for gathering the data, ensuring its integrity, and analyzing it to derive meaningful insights.

-

-Example Test Methodology:

-- For a web application, steps might include setting up a load generator, executing predetermined tasks, and measuring response times and energy consumption.

-- For a data processing application, it might involve running specific data sets through the software and measuring the time and energy required for processing.

-

-Each of these components should be defined with sufficient detail to enable consistent replication of the benchmarking process across different environments and software versions. This standardization is crucial for ensuring that the SCER ratings are reliable and comparable across different software applications.

-

-In summary, the following table can serve as a template or checklist when defining benchmarks:

-

-| Benchmark Measurement| Benchmark Workload | Test Environment |Test Methodology|

-| -------- | ---------| ---------|---------|

-| kWh, SCC, SCI (default), CO2e, or other | Unit of Software Function (USF); number of times to execute USF | Hardware specifications; software configuration | Setup instructions; execution steps; result collection and analysis |

-

----

-*Benchmark measurement shall be carbon related and is in SCI scores by default. However, if the benchmarking is done in a controlled environment where all other variables related to carbon emissions are equal, benchmarking can be measured in energy consumed (e.g. kWh) rather than in SCI.*

-

-#### 2.3 Rating Specification

-##### 2.3.1 **Rating Components:**

-The common components of a rating specification include the following:

-1. Rating Scale: Define the rating scale and the criteria for each rating level.

-2. Evaluation Methodology: Detail the evaluation methodology for converting benchmark results to ratings.

-3. Reporting and Disclosure: Standardized format for rating disclosure.

-

-##### 2.3.2 **Rating Algorithm:**

-The SCER (Software Carbon Efficiency Rating) is computed from SCI Score performance relative to peer software within the same category, as follows:

-

-1. **Data Collection**: Gather SCI Scores from multiple software applications within the same category.

-2. **Normalization**: Normalize SCI Scores to create a common scale.

-3. **Ranking**: Rank software based on normalized SCI Scores.

-4. **Percentile Calculation**: Calculate the percentile position for each software application:

-

-$$ \text{Percentile Position} = \left( \frac{\text{Rank} - 1}{\text{Total Number of Submissions} - 1} \right) \times 100 $$

-

-5. **Rating Assignment**: Based on the percentile position, assign a rating according to the pre-defined scale. The default rating scale is A to C.

-

-##### 2.3.3 **Rating Example**

-Here is a SCER rating example, where the SCI scores of 5 software applications in the same category are collected. Lower SCI (Software Carbon Intensity) scores indicate higher software carbon efficiency. The rating scale includes labels A through C:

-

-- **A**: Exceptionally efficient (percentile position above the 90th percentile).

-- **B**: Above average efficiency (percentile position from the 60th to 90th percentile).

-- **C**: Average to inefficient (percentile position below the 60th percentile).

-

-**Collected SCI Scores:**

-

-- Software 1: SCI Score of 100

-- Software 2: SCI Score of 150

-- Software 3: SCI Score of 160

-- Software 4: SCI Score of 200

-- Software 5: SCI Score of 250

-

-**Rating Assignment Process:**

-

-###### Step 1: Data Collection

-

-SCI Scores for five software applications have been collected.

-

-###### Step 2: Ranking

-

-The software applications are ranked by SCI Score, from highest to lowest:

-

-1. Software 5: 250

-2. Software 4: 200

-3. Software 3: 160

-4. Software 2: 150

-5. Software 1: 100

-

-###### Step 3: Percentile Calculation

-

-Percentile positions are calculated using the formula:

-

-$$ \text{Percentile Position} = \left( \frac{\text{Rank} - 1}{\text{Total Number of Submissions} - 1} \right) \times 100 $$

-

-- Software 5: 0%

-- Software 4: 25%

-- Software 3: 50%

-- Software 2: 75%

-- Software 1: 100%

-

-###### Step 4: Rating Assignment

-

-Using the rating scale:

-

-- **A**: Above 90%

-- **B**: 60% to 90%

-- **C**: Below 60%

-

-###### Final Ratings by Efficiency

-

-- Software 1: **A**

-- Software 2: **B**

-- Software 3: **C**

-- Software 4: **C**

-- Software 5: **C**

-

-

-The final ratings reflect the carbon efficiency of the software applications, with Software 3-5 being average to inefficient, Software 2 above average, and Software 1 being exceptionally efficient.
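The rating example above can be reproduced with a minimal Python sketch. It assumes the default A to C scale and the ranking direction used in the example (rank 1 is the highest SCI score). The function name `assign_ratings` and its threshold parameters are illustrative and not part of the specification, and ties between equal SCI scores are not handled.

```python
def assign_ratings(sci_scores, a_above=90.0, b_from=60.0):
    """Map {name: SCI score} to {name: (percentile position, rating)}.

    Illustrative helper only; thresholds follow the default scale:
    A above 90%, B from 60% to 90%, C below 60%.
    """
    if len(sci_scores) < 2:
        raise ValueError("at least two submissions are needed to compute a percentile")

    # Rank 1 is the highest (least efficient) SCI score, as in the example.
    ranked = sorted(sci_scores.items(), key=lambda item: item[1], reverse=True)
    total = len(ranked)

    results = {}
    for rank, (name, _score) in enumerate(ranked, start=1):
        percentile = (rank - 1) / (total - 1) * 100
        if percentile > a_above:
            rating = "A"
        elif percentile >= b_from:
            rating = "B"
        else:
            rating = "C"
        results[name] = (percentile, rating)
    return results


scores = {
    "Software 1": 100,
    "Software 2": 150,
    "Software 3": 160,
    "Software 4": 200,
    "Software 5": 250,
}
ratings = assign_ratings(scores)
for name in scores:
    percentile, rating = ratings[name]
    print(f"{name}: {percentile:.0f}% -> {rating}")
```

With the five scores from the example, this yields A for Software 1, B for Software 2, and C for Software 3 through 5, matching the final ratings listed above.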

-

-In summary, the following table can serve as a template or checklist when rating:

-

-| Rating Scale | Rating Algorithm | Reporting and Disclosure |

-| -------- | ---------| ---------|

-| e.g. A - C, or A, A+, A++, or AAA, etc. Default is A (>90%), B (60-90%), C (<60%) | e.g. collecting data, calculating the mean, etc. | e.g. rating assignment and reporting |

----

-

-

-#### 2.4 SCER Rating Visualization and Labeling

-

-

-

-(Experimental label image)

-

-**Reporting Requirements:**

-- Both certification levels require organizations to report their usage and conformance to the SCER WG in the GSF.

-- Organizations are encouraged to voluntarily report any changes when their conformance status is altered.

-

-**Standards and Compliance:**

-- SCER standards are periodically reviewed and updated to reflect technological advancements and market changes, ensuring the label remains a mark of best practices for high carbon efficiency.

-- The SCER WG monitors compliance through ongoing testing and market surveillance.

-- Products or services found to be non-compliant can have their certification revoked.

-

-**Summary:**

-

-The SCER certification program for LLMs is a comprehensive initiative designed to encourage sustainable practices in AI development. By providing clear guidelines and rigorous standards, SCER aims to reduce the carbon footprint of LLMs and promote environmental responsibility within the AI industry.

diff --git a/SCER_Certification_Program/images/SCER_Label.webp b/SCER_Certification_Program/images/SCER_Label.webp

deleted file mode 100644

index fe10132..0000000

Binary files a/SCER_Certification_Program/images/SCER_Label.webp and /dev/null differ

diff --git a/SCER_Certification_Program/images/SCER_Rating_Label.webp b/SCER_Certification_Program/images/SCER_Rating_Label.webp

deleted file mode 100644

index 5947732..0000000

Binary files a/SCER_Certification_Program/images/SCER_Rating_Label.webp and /dev/null differ

diff --git a/SCER_Certification_Program/images/scer_process_conformance.png b/SCER_Certification_Program/images/scer_process_conformance.png

deleted file mode 100644

index 30bad43..0000000

Binary files a/SCER_Certification_Program/images/scer_process_conformance.png and /dev/null differ

diff --git a/SCER_Certification_Program/images/scer_rating_conformance.png b/SCER_Certification_Program/images/scer_rating_conformance.png

deleted file mode 100644

index a16349a..0000000

Binary files a/SCER_Certification_Program/images/scer_rating_conformance.png and /dev/null differ

diff --git a/SPEC.md b/SPEC.md

new file mode 100644

index 0000000..1011226

--- /dev/null

+++ b/SPEC.md

@@ -0,0 +1,195 @@

+---

+version: 0.0.1

+---

+# Software Carbon Efficiency Rating (SCER) Specification

+

+## Introduction

+

+

+In the context of global digital transformation, the role of software in contributing to carbon emissions has become increasingly significant. This necessitates the development of standardized methodologies for assessing the environmental impact of software systems.

+

+Rationale:

+

+- The Rising Carbon Footprint of Software: The digitization of nearly every aspect of modern life has led to a surge in demand for software solutions, subsequently increasing the energy consumption and carbon emissions of the IT sector.

+- The Need for a Unified Approach: Currently, the lack of a standardized system for labeling the carbon efficiency of software products hinders effective management and reduction of carbon footprints across the industry.

+

+This document aims to establish the Software Carbon Efficiency Rating (SCER) Specification, a standardized framework for labeling the carbon efficiency of software systems. The SCER Specification aims to serve as a model for labeling software products according to their Software Carbon Intensity (SCI), and it is adaptable for different software categories.

+

+

+## Scope

+

+This specification provides a framework for displaying, calculating, and verifying software carbon efficiency labels. By adhering to these requirements, software developers and vendors can offer consumers a transparent and trustworthy method for assessing the environmental impact of software products.

+

+It outlines the label format, presentation guidelines, display requirements, computation methodology used to determine the software's carbon efficiency, and the requirements for providing supporting evidence to demonstrate the accuracy of the carbon efficiency claims.

+

+This specification is intended for a broad audience involved in the creation, deployment, or use of software systems, including but not limited to:

+- Software developers

+- IT professionals

+- Policy-makers

+- Business leaders

+

+

+## Normative references

+

+ISO/IEC 21031:2024

+Information technology — Software Carbon Intensity (SCI) specification.

+

+ISO/IEC 40500

+Information technology — W3C Web Content Accessibility Guidelines (WCAG) 2.0.

+

+ISO/IEC 18004:2024

+Information technology — Automatic identification and data capture techniques — QR code bar code symbology specification.

+

+## Terms and definitions

+

+For the purposes of this document, the following terms and definitions apply.

+

+ISO and IEC maintain terminological databases for use in standardization at the following addresses:

+- ISO Online browsing platform: available at https://www.iso.org/obp

+- IEC Electropedia: available at http://www.electropedia.org/

+

+> [!NOTE]

+> TODO: Update these definitions

+

+- Software Application: TBD

+- Software Carbon Efficiency: TBD

+- Software Carbon Intensity: TBD

+- Carbon: TBD

+- Functional Unit: TBD (From SCI)

+- Manifest File: TBD

+- QR Code: TBD

+

+

+The following abbreviations are used throughout this specification:

+

+> [!NOTE]

+> TODO: Update these abbreviations

+- SCI

+- SCER

+

+> [!NOTE]

+> For ease of reference; to be removed in the final spec.

+> - Requirements – shall, shall not

+> - Recommendations – should, should not

+> - Permission – may, need not

+> - Possibility and capability – can, cannot

+

+## Core Requirements

+

+### Ease of understanding

+A label that is hard to understand or requires expertise unavailable to most software consumers would defeat the purpose of adding transparency and clarity.

+

+SCER labels shall be uncluttered and shall have a clear and simple design that is easily understood.

+

+### Ease of Verification

+The SCER label Shall make it easy for a consumer of a software application to verify any claims made.

+

+Consumers should have all the information they need to verify any claims made on the label and to ensure the underlying calculation methodology or any related specification has been followed accurately.

+

+### Accessible

+The SCER label and format shall be accessible and shall meet accessibility specifications.

+

+### Language

+The SCER label Should be written using the English language and alphabet.

+

+## Calculation Methodology

+

+The SCI shall be used as the calculation methodology for the SCER label.

+

+Any computation of a SCI score for the SCER label SHALL adhere to all requirements of the SCI specification.

+

+> Note: SCI is a Software Carbon Efficiency Specification whose score is computed as "Carbon per Functional Unit" of a software product. For example, Carbon per Prompt for a Large Language Model.

+

+## Presentation Guidelines

+

+Components of a SCER Label

+

+### SCI Score:

+The presentation of the SCI score Shall follow this template:

+

+`[Decimal Value] gCO2eq per [Functional Unit]`

+

+- Where `[Decimal Value]` is the SCI score itself

+- Where the common term `Carbon` Shall be used to represent the more technical term `CO2e` (Carbon Dioxide Equivalent)

+- The symbol `/` Shall Not be used in place of `Per`

+- Where `[Functional Unit]` is text describing the Functional Unit as defined in the SCI calculation for this software application
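As a rough illustration of the template above, the following Python sketch builds and checks an SCI score string. The names `format_sci_score`, `is_valid_sci_score_text`, and `SCI_SCORE_PATTERN` are hypothetical, and the regular expression is only one possible reading of the template, assuming a plain (non-exponent) decimal value.

```python
import re
from decimal import Decimal

# Hypothetical pattern for "[Decimal Value] gCO2eq per [Functional Unit]".
# It requires the word "per", so a "/" separator is rejected.
SCI_SCORE_PATTERN = re.compile(r"\d+(\.\d+)? gCO2eq per \S.*")


def format_sci_score(value: Decimal, functional_unit: str) -> str:
    """Render an SCI score following the template (illustrative helper)."""
    unit = functional_unit.strip()
    if value < 0:
        raise ValueError("SCI score must not be negative")
    if not unit:
        raise ValueError("functional unit must not be empty")
    return f"{value} gCO2eq per {unit}"


def is_valid_sci_score_text(text: str) -> bool:
    """Check whether a label string matches the assumed template pattern."""
    return SCI_SCORE_PATTERN.fullmatch(text) is not None


label_text = format_sci_score(Decimal("0.42"), "prompt")
print(label_text)
print(is_valid_sci_score_text(label_text))
print(is_valid_sci_score_text("0.42 gCO2eq/prompt"))
```

Using `Decimal` rather than `float` keeps the value exactly as written, so the text on the label can be compared with the manifest file without rounding surprises.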

+

+### SCI Version

+The SCI version Should be visible, even in small sizes.

+

+The SCI version Shall describe which version of the SCI specification this SCER label complies with and have the following format:

+

+`[Short Name] [Version]`

+

+- Where `[Short Name]` is the abbreviated name of the SCI specification this SCER label is representing

+- Where `[Version]` is the official SCI specification version this label refers to.

+

+For example:

+- `SCI 1.1`

+- `SCI AI 1.0`

+

+### QR Code

+The QR Code Shall be a URL represented as a QR code as per ISO/IEC 18004:2024.

+

+The URL Shall point to a publicly accessible website where the manifest file that meets the requirements in the *Supporting Evidence* section can be downloaded.

+

+The URL Shall Not require a login, and it Shall be publicly accessible by anonymous users or non-human automated bots/scripts.

+

+## Display Requirements

+

+The SCER Label Shall conform to this layout:

+

+> [!NOTE]

+> This is a WIP; the current design doesn't meet size flexibility requirements.

+

+

+

+- Color: ??

+- Size: ??

+- Placement: ??

+- Font: ??

+- Example: ??

+

+## Supporting Evidence

+Per the presentation guidelines, the SCER label will link to a manifest file that provides evidence to support any claims made on the label.

+

+The manifest file Shall meet three criteria to pass as supporting evidence.

+

+### Conformance

+Evidence that the underlying SCI requirements have been met in the computation of the SCI score.

+

+The Manifest File Shall clearly describe the Software Boundary per the SCI specification.

+

+The Manifest File Shall follow the Impact Manifest Protocol Standard for communicating environmental impacts.

+

+The Manifest File should use granular data that aligns with SCI recommendations.

+

+### Correctness

+Correctness means confirming that the numbers in the manifest file match the information on the SCER label.

+

+The Manifest File shall have an aggregate value for the SCI score that matches the reported score on the SCER label.

+

+The Manifest File shall have a Functional Unit that matches the reported Functional Unit on the SCER label.
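The two correctness requirements above amount to a pair of equality checks. The Python sketch below assumes the aggregate SCI score and Functional Unit have already been extracted from the manifest file; how they are read from the manifest is not shown, and the function name `correctness_issues` and the case-insensitive unit comparison are assumptions made for illustration.

```python
from decimal import Decimal


def correctness_issues(label_score, label_unit, manifest_score, manifest_unit):
    """Return a list of mismatches between a SCER label and its manifest.

    Scores are passed as strings and compared as decimals, so "0.42" and
    "0.420" are treated as equal. Comparing units case-insensitively is an
    assumption of this sketch, not a rule from the specification.
    """
    issues = []
    if Decimal(label_score) != Decimal(manifest_score):
        issues.append(
            f"SCI score mismatch: label {label_score}, manifest {manifest_score}"
        )
    if label_unit.strip().lower() != manifest_unit.strip().lower():
        issues.append(
            f"Functional Unit mismatch: label {label_unit!r}, manifest {manifest_unit!r}"
        )
    return issues


matching = correctness_issues("0.42", "prompt", "0.420", "Prompt")
mismatched = correctness_issues("0.42", "prompt", "0.45", "request")
print(matching)
print(mismatched)
```

An empty list means both checks pass; any entries describe which label field disagrees with the manifest.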

+

+### Verification

+Verification is the act of confirming the evidence supports the claim.

+

+Verification of a SCER label Shall be possible using open source software and open data.

+

+Verification Shall be free for the end user and Shall Not require purchasing licenses for software or data or logging into external systems.

+

+If Verification requires access to data, that data Shall also be publicly available and free to use.

+

+

+## Appendices

+

+Supporting documents, example calculations, and reporting templates.

+

+---

+

+## References

+

+List of references used in the creation of the SCER Specification.

+

+---

+

diff --git a/Software_Carbon_Efficiency_Rating(SCER)/Discussions.md b/Software_Carbon_Efficiency_Rating(SCER)/Discussions.md

deleted file mode 100644

index 88f4b75..0000000

--- a/Software_Carbon_Efficiency_Rating(SCER)/Discussions.md

+++ /dev/null

@@ -1,49 +0,0 @@

-## Discussions

-1. Different standard rating programs call for different rating scopes, calculations, and algorithms. Some represent ratings as numeric scores (e.g. EnergyStar), others as letters (e.g. NutriScore). Therefore, for SCER to be widely adopted and flexible enough to fit a wide range of use cases, the SCER spec shall take a generic and modular approach to standardization, enabling domain experts from different industry verticals to define category-specific SCER standards based on the generic SCER spec.

-2. SCI addresses the score, but lacks a **relative rating specification**. SCER therefore supplements the SCI spec with an easy-to-understand rating mechanism. SCER also defines the standard specification for software categorization, benchmark definition, and rating definition. These serve as guidelines for users of the SCER spec to create their own category-specific SCER specifications.

-2. Using the SCER spec involves data collection and publication of ratings for various types or categories of software. For instance, [MLPerf](https://mlcommons.org/) and [Geekbench](https://www.geekbench.com/) each define a standard set of workloads and benchmarks; MLPerf's workload is open source, while Geekbench's is closed source. Both also provide a means of data collection and intuitive result publication. What is the direction of SCER in this respect? Do we define the spec so that others can use it to define their own category-specific SCER specs, or do we create a SCER platform more like MLPerf or Geekbench, where benchmarks and workload data collection are crowd-sourced and the results are published on a central SCER platform?

-1. House-keeping item: Confirming CLA (Contributor License Agreements) handling:

- - GitHub Integration: There are tools like CLA assistant that integrate with GitHub to manage CLA confirmations. When a contributor submits a pull request, they are prompted to agree to the CLA with a single click if they haven't done so already for that project.

- - Git Signed-off-by Statement: In certain projects, especially those using Git, a contributor might use the signed-off-by statement in their commits as an indication that they agree to the terms of the CLA, though this method is less formal and typically used in conjunction with another method.

-

-

-## References

-This References section demonstrates cases where different rating, labelling systems are used for different use cases and industry verticals.

-

-### EnergyStar:

-

-To earn the ENERGY STAR, eligible commercial buildings must earn a 1–100 ENERGY STAR score of 75 or higher, indicating that they operate more efficiently than at least 75% of similar buildings nationwide. Before applying, a building's application must be verified by a Professional Engineer or Registered Architect.

-

-

-

-

-

-### Nutri-Score:

-Nutri-Score is a front-of-package nutritional label that converts the nutritional value of food and beverages into a simple overall score. It is based on a scale of five colors and letters:

-- A: Green to represent the best nutritional quality

-- B: Light green, meaning it's still a favorable choice

-- C: Yellow, a balanced choice

-- D: Orange, less favorable

-- E: Dark orange to show it is the lowest

-

-The Nutri-Score calculation pinpoints the nutritional value of a product based on the ingredients. It takes into account both positive points (fiber content, protein, vegetables, fruit, and nuts) and negative points (kilojoules, fat, saturated fatty acids, sugar, and salt).

-

-The Nutri-Score is calculated per 100g or 100ml. The goal of the Nutri-Score is to influence consumers at the point of purchase to choose food products with a better nutritional profile, and to incentivize food manufacturers to improve the nutritional quality of their products.

-

-

-

-### CDP:

-[CDP](https://www.cdp.net/en/info/about-us) (formerly Carbon Disclosure Project): A global disclosure system for companies, cities, states, and regions to manage their environmental impacts.

-- Data collection as a form of company disclosure: CDP provides a guide that covers the key steps to disclose as a company, including setting up a CDP account, responding to the CDP questionnaire(s), and receiving a CDP score.

-- A CDP score is a snapshot of a company’s environmental disclosure and performance. CDP's scoring methodology is fully aligned with regulatory boards and standards, and provides comparability in the market.

-

-

-

-### LEED

-LEED stands for Leadership in Energy and Environmental Design. It is the most widely used green building rating system in the world. LEED is an environmentally oriented building certification program run by the U.S. Green Building Council (USGBC).

-LEED provides a framework for healthy, efficient, and cost-saving green buildings. It aims to improve building and construction project performance across seven areas of environmental and human health.

-

-

-To achieve LEED certification, a project earns points by adhering to prerequisites and credits that address carbon, energy, water, waste, transportation, materials, health and indoor environmental quality. Projects go through a verification and review process by GBCI and are awarded points that correspond to a level of LEED certification: **Certified (40-49 points), Silver (50-59 points), Gold (60-79 points) and Platinum (80+ points)**.

-

\ No newline at end of file

diff --git a/Software_Carbon_Efficiency_Rating(SCER)/SCER for Database Server Software.md b/Software_Carbon_Efficiency_Rating(SCER)/SCER for Database Server Software.md

deleted file mode 100644

index 70d6846..0000000

--- a/Software_Carbon_Efficiency_Rating(SCER)/SCER for Database Server Software.md

+++ /dev/null

@@ -1,186 +0,0 @@

-# SCER Specification for Relational Database Server Software

-

-## Version 0.1

-

-### Abstract

-

-The Software Carbon Efficiency Rating (SCER) for Relational Database Server Software provides a framework for assessing the carbon efficiency of database management systems (DBMS) based on energy consumption and operational efficiency.

-

-### Table of Contents

-

-1. [Introduction](#1-introduction)

-2. [Objective](#2-objective)

-3. [Terminology](#3-terminology)

-4. [Scope](#4-scope)

-5. [Software Categorization](#5-software-categorization)

-6. [Benchmarking](#6-benchmarking)

-7. [Rating System](#7-rating-system)

-8. [Rating Calculation Algorithm](#8-rating-calculation-algorithm)

-9. [Compliance and Verification](#9-compliance-and-verification)

-10. [Future Directions](#10-future-directions)

-

----

-

-### 1. Introduction

-

-Database servers are integral to IT infrastructure with substantial energy footprints. Enhancing their carbon efficiency is vital for minimizing the environmental impact of data-centric operations worldwide.

-

-### 2. Objective

-

-To define and implement a standard that measures and rates the carbon efficiency of relational database server software, encouraging industry progression towards more sustainable practices.

-

-### 3. Terminology

-

-- **SCER**: Software Carbon Efficiency Rating

-- **DBMS**: Database Management System

-- **OPS/Watt-hour**: Operations Per Second per Watt

-- **Carbon Footprint**: The total CO2e emissions associated with the DBMS across its lifecycle.

-

-### 4. Scope

-

-This standard applies to relational database server software encompassing open-source and proprietary systems.

-

-### 5. Software Categorization

-

-Database server software is categorized based on:

-

-- Scale: small, medium, large

-- Use case: transactional, analytical, hybrid

-- Deployment model: on-premises, cloud-based, hybrid

-

-### 6. Benchmarking

-

-Benchmarking focuses on:

-

-- **OPS/Watt-hour**: Database operations executed per watt hour of power consumed.

-- **Transaction Efficiency**: Energy consumed per completed transaction.

-- **Query Optimization**: Energy cost of executing complex queries.

-

-### 7. Rating System

-

-SCER classifications:

-

-- **A**: Highly efficient operations (>X OPS/Watt-hour), advanced query optimization, low energy per transaction.

-- **B**: Moderately efficient ([Y-X] OPS/Watt-hour), standard query optimization, average energy per transaction.

-- **C**: Less efficient (X Streams/Watt-hour), high data transfer efficiency, and optimal user engagement.

-- **B**: Moderately efficient ([Y-X] Streams/Watt-hour), moderate data transfer efficiency, and user engagement.

-- **C**: Less efficient (

- **N**: Not similar

-

-- Relational Database Server Category Example:

-

- | Software Applications| Purpose and Functionality | Platform and Deployment |End User Base|

- | -------- | :---------:| :---------:|:---------:|

- | MySQL | S | S |S |

- | PostgreSQL | S | S |S |

- | SQLite | S | **N** |S |

-

- In this example, SQLite is a lightweight database that is mostly deployed on mobile phone platforms, which differ significantly from those of MySQL and PostgreSQL. SQLite therefore should not belong to this category and should not be used for SCER rating in this category.

-

-##### 2.1.2 Extended Components of a Software Categorization

-

-The following extended aspects may be used in determining whether software applications are in the same category:

-

-- **Type of License:** Differentiating software based on open-source or proprietary status and licensing models.

- - **Open Source vs. Proprietary:** Linux kernel as open-source software vs. Microsoft Windows as proprietary software.

- - **Licensing Model:** Subscription-based model like Adobe Creative Cloud versus a one-time purchase software like Final Cut Pro.

- - **Example Categories:**

- - **Open Source:** Mozilla Firefox: Open-source web browser. LibreOffice: Open-source office suite.

- - **Proprietary:** Microsoft Office: Proprietary office suite. Adobe Acrobat: Proprietary PDF solution.

-

-- **Technical Complexity:** Evaluating the software's architecture and dependencies.

- - **Architecture:** Docker containers showcasing microservices architecture.

- - **Dependencies:** Node.js applications often depend on numerous packages from npm (node package manager).

- - **Example Categories:**

- - **Data Processing:** Hadoop: Framework for distributed storage and processing of large data sets. Elasticsearch: Search and analytics engine for all types of data.

- - **Content Management Systems:** Joomla: Content management system for web content. Drupal: Content management system for complex websites.

-

-- **Integration and Ecosystem:** Assessing compatibility and ecosystem integration.

- - **Compatibility:** Slack integrates with numerous other productivity tools like Trello, Asana, and Google Drive.

- - **Ecosystem:** Apple’s iOS apps that are part of the broader Apple ecosystem, designed to work seamlessly with other Apple devices and services.

- - **Example Categories:**

- - **Customer Relationship Management (CRM):** Salesforce: CRM solution with extensive integration capabilities. HubSpot CRM: Inbound marketing, sales, and service software with integration features.

- - **Development Tools:** JetBrains IntelliJ IDEA: Integrated development environment for software development. Microsoft Visual Studio: Comprehensive development environment with extensive integrations.

-

-- **Regulatory and Compliance Considerations:** Ensuring compliance with applicable regulations and standards.

- - **Healthcare Software:** HIPAA-compliant patient management systems like Cerner.

- - **Financial Software:** SEC-compliant trading platforms like TD Ameritrade.

- - **Example Categories:**

- - **Financial Services:** Thomson Reuters Eikon: Financial data and analytics tool. FactSet: Financial data and software for investment professionals.

- - **Telecommunications:** Amdocs: Software and services for communications, media, and financial services providers. Ericsson: Network software for telecom operators.

-

-- **Feedback and Market Recognition:** Using market recognition and user feedback for category validation.

- - **User Reviews:** Yelp for restaurant and business reviews.

- - **Awards and Recognition:** Autodesk AutoCAD receiving awards for its CAD design software capabilities.

- - **Example Categories:**

- - **Web Browsers:** Google Chrome: Highly rated for speed and integration with Google services. Mozilla Firefox: Praised for privacy features and open-source development.

- - **E-Commerce Platforms:** Shopify: Widely recognized platform for creating online stores. Magento: Renowned open-source e-commerce platform.

-

-- **Industry Vertical:** Classifying software according to the relevant industry vertical.

- - **Healthcare:** Epic Systems for electronic health records management.

- - **Finance:** QuickBooks for accounting in small to mid-sized businesses.

- - **Example Categories:**

- - **Healthcare:** McKesson: Medical supplies ordering and healthcare information technology. Allscripts: Provider of electronic record, practice management, and other clinical solutions.

- - **Education:** Blackboard: Learning management system for education providers. Canvas: Web-based learning management system for educational institutions.

-

-- **Consistent Review and Update:**

- - **Periodic Review:** Google Chrome, which releases updates frequently to reflect the latest web standards and security practices.

- - **Benchmark against Peers:** Comparing Microsoft Office 365 to other productivity suites like Google Workspace for feature set and market position.

- - **Example Categories:**

- - **Operating Systems:** Windows 10: Regular feature updates and security patches. macOS: Annual updates with feature enhancements and security improvements.

- - **Security Software:** Symantec Norton: Consistent updates for virus definitions and security features. McAfee: Frequent updates to maintain security and performance standards.

-

-- **Size of the Software:** Many software packages are large, and their footprint is multiplied by every installation across users.

- - **Software Size itself:** Depending on the type of installation the application requires, its size can have a large impact on the carbon emissions generated by the software.

- - **Dependencies:** Node.js or Angular applications depend on numerous packages, which also need to be downloaded with each installation.

- - **Examples:**

- - **Desktop/Mobile Applications:** Microsoft Office 365, Visual Studio, OS, etc.

- - **Web Applications:** Microsoft 365 Apps, Open Cloud Architecture, Virtual work environment, etc.

-

-Note:

-1. Should SaaS (Software as a Service) be part of the software categorization? Most likely yes for SCER purposes; a music streaming service is one example. SCER really seems to mean a software-*induced* carbon efficiency rating: the carbon efficiency that results from running the software application, regardless of whether it runs as a service or on a desktop.

-2. Should these aspects of categorizing software be combined with an OR or an AND relationship? Do all software applications in the same category need to have the same answer for each aspect? For example, should Microsoft Office and LibreOffice belong to the same office suite category for the purpose of SCER rating, even though one is proprietary and the other is open source?

-3. Are these too heavy? Any other considerations missing?

-

----

-

-#### 2.2 Benchmarking Specification

-

-This section of the SCER Specification provides a detailed description of the benchmarking process for measuring the carbon efficiency of software applications. It includes the selection of appropriate metrics, the definition of the benchmarking workload, the necessary test environment setup, and the methodology for executing the benchmarks.

-

-##### 2.2.1 Definition of Benchmarks

-

-Benchmarks serve as the comparative performance standards against which software applications are evaluated for their [Software Carbon Intensity (SCI)](https://github.com/Green-Software-Foundation/sci/blob/main/Software_Carbon_Intensity/Software_Carbon_Intensity_Specification.md) scores or [Social Cost of Carbon (SCC)](https://www.brookings.edu/articles/what-is-the-social-cost-of-carbon/#:~:text=The%20social%20cost%20of%20carbon%20(SCC)%20is%20an%20estimate%20of,a%20ton%20of%20carbon%20emissions.) dollars. The default measurement of benchmarks is in SCI scores.

-

-##### 2.2.2 Unit of Software Function (USF)

-

-The "Unit of Software Function" or USF refers to the most commonly used function of the software, such as:

-

-- **Read/Write Operations** for database software

-- **Rendering a Frame** for graphical software or video games

-- **Handling a Web Request** for web servers

-- **Processing a Data Block** for data processing software

-

-##### 2.2.3 Standardized Tests or Benchmarking Workload

-

-For each category of software, a standardized test can be developed to measure the functional utility of the software, which is then used to calculate its SCI score. The tests will simulate the most commonly used function of the software.

-

-The workload used for benchmarking should be representative of real-world scenarios to ensure the results are relevant and actionable.

-

-- **Workload Profiles**: Detailed descriptions of the different types of workload the software will be subjected to during the benchmarking process.

-- **Workload Metrics**: The quantitative measures of each workload profile, such as the number of simultaneous users, data size, and operation mix.

-

-Example Workload Profiles:

-- For a video streaming service, the workload might simulate streaming at various resolutions.

-- For a customer relationship management (CRM) system, the workload could involve a mix of database reads/writes, report generations, and user queries.
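
A workload profile and its metrics could be captured as a small data structure so that benchmark runs are described consistently. The following is only a sketch: the `WorkloadProfile` class, its field names, and the example values are illustrative assumptions, not part of the SCER specification.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass(frozen=True)
class WorkloadProfile:
    """Hypothetical description of one benchmarking workload."""

    name: str
    simultaneous_users: int
    data_size_mb: float
    # Fraction of total operations per operation type; must sum to 1.
    operation_mix: Dict[str, float]

    def __post_init__(self) -> None:
        if self.simultaneous_users <= 0:
            raise ValueError("simultaneous_users must be positive")
        total = sum(self.operation_mix.values())
        if abs(total - 1.0) > 1e-9:
            raise ValueError(f"operation_mix must sum to 1, got {total}")


# Illustrative CRM workload: the numbers are made up for this example.
crm_profile = WorkloadProfile(
    name="crm-mixed",
    simultaneous_users=200,
    data_size_mb=512.0,
    operation_mix={
        "db_read": 0.6,
        "db_write": 0.2,
        "report_generation": 0.1,
        "user_query": 0.1,
    },
)
print(crm_profile.name, crm_profile.simultaneous_users)
```

Validating the operation mix at construction time is one way to catch a mis-specified profile before a benchmark run starts.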

-

-##### 2.2.4 SCI Score Formula

-

-The SCI Score for a software application will be calculated as follows:

-

-$$\text{SCI Score} = \frac{\text{CO2e emissions}}{\text{USF}}$$

-

-Where CO2e emissions are measured in kilograms and USF is determined by the benchmark test.
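
As a minimal sketch of the formula above, the calculation can be written as a single division of measured emissions by the number of USF executions. The function name `sci_score`, its parameter names, and the example figures are assumptions made for illustration only.

```python
def sci_score(co2e_kg: float, usf_count: float) -> float:
    """Return kilograms of CO2e emitted per Unit of Software Function (USF)."""
    if co2e_kg < 0:
        raise ValueError("co2e_kg must be non-negative")
    if usf_count <= 0:
        raise ValueError("usf_count must be positive")
    return co2e_kg / usf_count


# Hypothetical benchmark run: 0.5 kg CO2e emitted while handling 10,000 web requests.
score = sci_score(co2e_kg=0.5, usf_count=10_000)
print(f"{score:.6f} kg CO2e per request")
```

A lower score means less carbon emitted per unit of function, so applications in the same category can be compared directly when their USF is defined identically.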

-

-The default measurement is SCI, but the SCER rating can also be based on other metrics, such as kWh, SCC, or CO2e.

-

-##### 2.2.5 Test Environment

-

-The test environment must be standardized to minimize variability in the benchmark results.

-

-- **Hardware Specifications**: A description of the physical or virtual hardware on which the software will be tested, including processor, memory, storage, and networking details.

-- **Software Configuration**: The operating system, middleware, and any other software stack components should be specified and standardized.

-

-Example Test Environments:

-- A specified cloud instance type with a defined operating system and resource allocation.

-- A physical server with controlled ambient temperature and power supply to ensure consistent results.

-

-##### 2.2.6 Test Methodology

-

-A detailed, repeatable method for conducting the benchmarks.

-

-- **Setup Instructions**: Step-by-step guidance to prepare the test environment, including installation and configuration of the software and tools.

-- **Execution Steps**: A sequential list of actions to perform the benchmark, including starting the software, initiating the workload, monitoring performance, and recording results.

-- **Result Collection and Analysis**: Guidelines for gathering the data, ensuring its integrity, and analyzing it to derive meaningful insights.

-

-Example Test Methodology:

-- For a web application, steps might include setting up a load generator, executing predetermined tasks, and measuring response times and energy consumption.

-- For a data processing application, it might involve running specific data sets through the software and measuring the time and energy required for processing.

-

-Each of these components should be defined with sufficient detail to enable consistent replication of the benchmarking process across different environments and software versions. This standardization is crucial for ensuring that the SCER ratings are reliable and comparable across different software applications.

-

-In summary, the following table can serve as a template or checklist when defining benchmarks:

-

-| Benchmark Measurement| Benchmark Workload | Test Environment |Test Methodology|

-| -------- | ---------| ---------|---------|

-| kWh; SCC; SCI (default); CO2e; or other | Unit of Software Function (USF); number of times to execute the USF | Hardware Specifications; Software Configuration | Setup Instructions; Execution Steps; Result Collection and Analysis |

-

----

-*The benchmark measurement shall be carbon related and is expressed in SCI scores by default. However, if the benchmarking is done in a controlled environment where all other variables related to carbon emissions are equal, the benchmark can be measured in energy consumed (e.g. kWh) rather than in SCI.*

-

-#### 2.3 Rating Specification

-##### 2.3.1 **Rating Components:**

-The common components of a rating specification include the following:

-1. Rating Scale: Define the rating scale and the criteria for each rating level.

-2. Evaluation Methodology: Detail the evaluation methodology for converting benchmark results to ratings.

-3. Reporting and Disclosure: Standardized format for rating disclosure.

-

-##### 2.3.2 **Rating Algorithm:**

-The SCER (Software Carbon Efficiency Rating) is computed from an application's SCI Score relative to peer software within the same category:

-

-1. **Data Collection**: Gather SCI Scores from multiple software applications within the same category.

-2. **Normalization**: Normalize SCI Scores to create a common scale.