diff --git a/python-recipes/semantic-cache/03_context_enabled_semantic_caching.ipynb b/python-recipes/semantic-cache/03_context_enabled_semantic_caching.ipynb

new file mode 100644

index 0000000..d2e3d6a

--- /dev/null

+++ b/python-recipes/semantic-cache/03_context_enabled_semantic_caching.ipynb

@@ -0,0 +1,1292 @@

+{

+ "cells": [

+ {

+ "cell_type": "markdown",

+ "metadata": {

+ "id": "vrbm9EkW-kRo"

+ },

+ "source": [

+ "\n",

+ "\n",

+ "# Context-Enabled Semantic Caching with Redis\n",

+ "\n",

+ "\n",

+ " "

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {

+ "id": "4i9pSolc896M"

+ },

+ "source": [

+ "## What is Context-Enabled Semantic Caching?\n",

+ "\n",

+ "\n",

+ "Most caching systems today are **exact match**. They only return results if the query matches a key 1:1. \n",

+ "Ask **“What’s the weather in NYC?”**, and the system might cache and return that exact string. \n",

+ "But change it slightly—**“Is it raining in New York?”**—and you miss the cache completely.\n",

+ "\n",

+ "**Semantic caching** fixes that. It uses **vector embeddings** to find conceptually similar queries. \n",

+ "So whether a user asks “forecast for NYC,” “weather in Manhattan,” or “umbrella needed in NYC?”, they all hit the **same cached result** if the meaning aligns.\n",

+ "\n",

+ "But here’s the problem: \n",

+ "Even if you nail semantic similarity, **not all users want the same level of detail or format**. \n",

+ "As LLM applications retain more history and memory about each user, cached answers can be tailored to the individual at a fraction of the cost of a fresh call.\n",

+ "\n",

+ "That’s where **Context-Enabled Semantic Caching (CESC)** comes in.\n",

+ "\n",

+ "---\n",

+ "\n",

+ "\n",

+ "\n",

+ "### The Business Problem\n",

+ "\n",

+ "Enterprise LLM applications face three critical challenges:\n",

+ "- **Cost**: GPT-4o calls can cost $0.0025-0.01 per 1K tokens\n",

+ "- **Latency**: Cold LLM calls take 2-5 seconds, hurting user experience \n",

+ "- **Relevance**: Generic responses don't account for user roles, preferences, or context\n",

+ "\n",

+ "### Why It Matters\n",

+ "\n",

+ "| Challenge | Traditional Caching | Semantic Caching | CESC (Personalized) |\n",

+ "|----------------|-----------------------------|----------------------------------------|-------------------------------------------|\n",

+ "| **Match Type** | Exact string | Vector similarity | Vector + user context |\n",

+ "| **Relevance** | Low | Medium | High |\n",

+ "| **Latency** | Fast | Fast | Still fast (cached + lightweight model) |\n",

+ "| **Cost** | Low | Low | Low (personalization uses GPT-4o-mini instead of a full GPT-4o call) |\n",

+ "\n",

+ "\n",

+ "\n",

+ "---\n",

+ "\n",

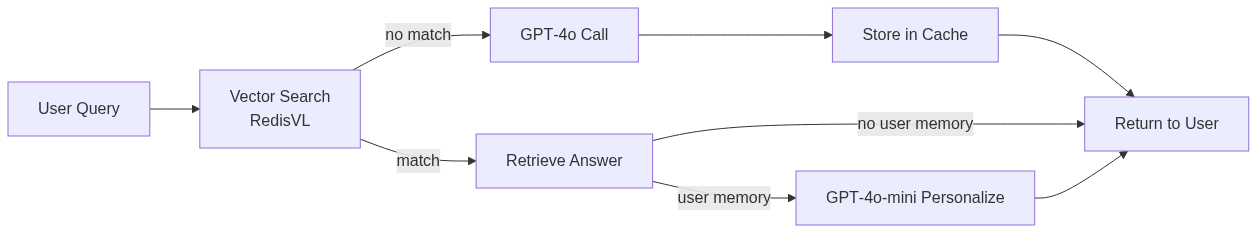

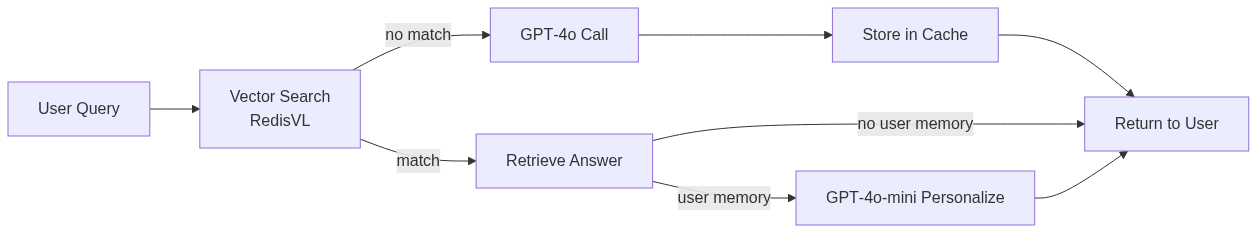

+ "### Our Solution Architecture\n",

+ "\n",

+ "CESC creates a three-tier response system:\n",

+ "1. **Cold Start**: Fresh LLM call for new queries (expensive, slow, but comprehensive)\n",

+ "2. **Cache Hit**: Instant return of semantically similar cached responses (fast, cheap, generic)\n",

+ "3. **Personalized Cache Hit**: Lightweight model personalizes cached content using user memory (balanced speed/cost/relevance)\n",

+ "\n",

+ "Let's see this in action with a real enterprise IT support scenario.\n",

+ "[](https://mermaid.live/edit#pako:eNpdkU1uwjAQha9izTpQfkyAqEJCqdQNlSBpWTRh4SYDiRTbaOKUAkLqFXrFnqROgmjVWdnz5n1-8pwh0SmCB9tCH5JMkGGLIFbM1ip6KZHYqkI6blinM2NhtMbEaGIhCkqy-ze6mwWY5uV6sWk9oZ1jSjMpTJI1nkX0uHz-_vzimvmiKFqQH4UWgyxXtplkeHX7jRhEAZqKFDOa1Qn-on-583qKcnxHNlfl4TY2vyao6uwSpaZjS_0j_9eWt4wdmaucLZFKrUSRn7DNG4ADO8pT8LaiKNEBiSRFfYdzzY3BZCgxBs8eU9yKqjAxxOpifXuhXrWW4BmqrJN0tctunGqfCoMPudiRkLcuoUqRfF0pAx7vTxsIeGf4AG867Lp8POmNXT4YuLYcOILXd6ddPhzzSd8d8Snn3L04cGqe7XUn45EDdk32y5_aZTc7v_wAqpSdUg)"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {},

+ "source": [

+ "## Install dependencies"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": 1,

+ "metadata": {

+ "id": "v6g7eVRZAcFA"

+ },

+ "outputs": [],

+ "source": [

+ "# 📦 Install required Python packages\n",

+ "!pip install -q \"redisvl>=0.8.0\" sentence-transformers openai tiktoken python-dotenv redis pandas numpy"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {},

+ "source": [

+ "## Run a Redis instance\n",

+ "\n",

+ "\n",

+ "#### For Colab\n",

+ "Use the shell script below to download, extract, and install [Redis Stack](https://redis.io/docs/getting-started/install-stack/) directly from the Redis package archive."

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {

+ "id": "m04KxSuhBiOx"

+ },

+ "outputs": [],

+ "source": [

+ "# NBVAL_SKIP\n",

+ "%%sh\n",

+ "curl -fsSL https://packages.redis.io/gpg | sudo gpg --dearmor -o /usr/share/keyrings/redis-archive-keyring.gpg\n",

+ "echo \"deb [signed-by=/usr/share/keyrings/redis-archive-keyring.gpg] https://packages.redis.io/deb $(lsb_release -cs) main\" | sudo tee /etc/apt/sources.list.d/redis.list\n",

+ "sudo apt-get update > /dev/null 2>&1\n",

+ "sudo apt-get install redis-stack-server > /dev/null 2>&1\n",

+ "redis-stack-server --daemonize yes"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {},

+ "source": [

+ "#### For Alternative Environments\n",

+ "There are several ways to get the necessary Redis Stack instance running:\n",
+ "\n",
+ "1. In the cloud: deploy a [free Redis Cloud instance](https://redis.com/try-free/). If you already run your own Redis Enterprise deployment, that works too.\n",
+ "2. On your own OS: [see the installation docs](https://redis.io/docs/latest/operate/oss_and_stack/install/install-stack/).\n",
+ "3. With Docker: `docker run -d --name redis-stack-server -p 6379:6379 redis/redis-stack-server:latest`"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {

+ "id": "xlsHkIF49Lve"

+ },

+ "source": [

+ "## Infrastructure Setup\n",

+ "\n",

+ "We're using Redis with vector search capabilities to store embeddings and enable semantic similarity matching. This simulates a production environment where your cache would be persistent across sessions.\n",

+ "\n",

+ "**Note**: In production, you'd typically use Redis Enterprise, or a managed Redis service such as Redis Cloud or Azure Managed Redis with proper clustering, persistence, and security configurations."

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": 2,

+ "metadata": {

+ "colab": {

+ "base_uri": "https://localhost:8080/"

+ },

+ "id": "we-6LpNAByt1",

+ "outputId": "89b7e9c1-63f9-4458-cdab-0bc98b88a09e"

+ },

+ "outputs": [

+ {

+ "data": {

+ "text/plain": [

+ "True"

+ ]

+ },

+ "execution_count": 2,

+ "metadata": {},

+ "output_type": "execute_result"

+ }

+ ],

+ "source": [

+ "import os\n",
+ "import redis\n",
+ "\n",
+ "# Redis connection parameters (overridable through environment variables)\n",
+ "REDIS_HOST = os.getenv(\"REDIS_HOST\", \"localhost\")\n",
+ "REDIS_PORT = int(os.getenv(\"REDIS_PORT\", \"6379\"))\n",
+ "REDIS_PASSWORD = os.getenv(\"REDIS_PASSWORD\", \"\")\n",
+ "\n",
+ "# Create the Redis client\n",
+ "redis_client = redis.Redis(\n",
+ "    host=REDIS_HOST,\n",
+ "    port=REDIS_PORT,\n",
+ "    password=REDIS_PASSWORD\n",
+ ")\n",
+ "\n",
+ "# Connection URL reused later when connecting the RedisVL index\n",
+ "redis_url = f\"redis://:{REDIS_PASSWORD}@{REDIS_HOST}:{REDIS_PORT}\" if REDIS_PASSWORD else f\"redis://{REDIS_HOST}:{REDIS_PORT}\"\n",
+ "\n",
+ "# Test the connection (returns True when Redis is reachable)\n",
+ "redis_client.ping()"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {},

+ "source": [

+ "## Essential Imports\n",

+ "\n",

+ "This cell imports all the key libraries needed for Context-Enabled Semantic Caching:\n",

+ "\n",

+ "**Core AI & ML:**\n",

+ "- `sentence_transformers` - For generating text embeddings using the all-MiniLM-L6-v2 model\n",

+ "- `openai` - Client libraries for both OpenAI and Azure OpenAI APIs\n",

+ "- `tiktoken` - Accurate token counting for cost calculation\n",

+ "\n",

+ "**Redis & Vector Search:**\n",

+ "- `redis` - Direct Redis client for database operations\n",

+ "- `redisvl` - Redis Vector Library for semantic search capabilities\n",

+ "- `SearchIndex` - Vector search index management\n",

+ "- `HFTextVectorizer` - Hugging Face text vectorization utilities\n",

+ "\n",

+ "**Data & Utilities:**\n",

+ "- `pandas` - Data analysis and telemetry reporting\n",

+ "- `numpy` - Numerical operations for vector handling\n",

+ "- `typing` - Type hints for better code clarity\n",

+ "- `dotenv` - Environment variable management for API keys"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": 3,

+ "metadata": {},

+ "outputs": [


+ {

+ "data": {

+ "text/plain": [

+ "True"

+ ]

+ },

+ "execution_count": 3,

+ "metadata": {},

+ "output_type": "execute_result"

+ }

+ ],

+ "source": [

+ "import logging\n",
+ "import os\n",
+ "import sys\n",
+ "import time\n",
+ "import uuid\n",
+ "from typing import Dict, List\n",
+ "\n",
+ "import numpy as np\n",
+ "import pandas as pd\n",
+ "import redis\n",
+ "import tiktoken\n",
+ "from dotenv import load_dotenv\n",
+ "from openai import AzureOpenAI, OpenAI\n",
+ "from redisvl.index import SearchIndex\n",
+ "from redisvl.utils.vectorize import HFTextVectorizer\n",
+ "from sentence_transformers import SentenceTransformer\n",
+ "\n",
+ "# Suppress noisy loggers\n",
+ "logging.getLogger(\"sentence_transformers\").setLevel(logging.WARNING)\n",
+ "logging.getLogger(\"httpx\").setLevel(logging.WARNING)\n",
+ "\n",
+ "# Load environment variables from a .env file in the project root, if one exists\n",
+ "load_dotenv()"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {},

+ "source": [

+ "## LLM Client Setup\n",

+ "\n",

+ "This section handles the detection and initialization of our LLM client. We support both OpenAI and Azure OpenAI with automatic detection based on available environment variables:\n",

+ "\n",

+ "- **Priority 1**: OpenAI (if `OPENAI_API_KEY` is present)\n",

+ "- **Priority 2**: Azure OpenAI (if `AZURE_OPENAI_API_KEY` + `AZURE_OPENAI_ENDPOINT` are present) \n",

+ "- **Fallback**: Exit with clear instructions if no credentials found"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": 4,

+ "metadata": {},

+ "outputs": [

+ {

+ "name": "stdout",

+ "output_type": "stream",

+ "text": [

+ "🔒 Azure OpenAI detected\n"

+ ]

+ }

+ ],

+ "source": [

+ "# Helper function to get secrets from Colab or environment variables\n",
+ "def get_secret(secret_name: str) -> \"str | None\":\n",
+ "    \"\"\"\n",
+ "    Retrieves a secret from Google Colab's userdata if available,\n",
+ "    otherwise falls back to an environment variable.\n",
+ "    Returns None when the secret is not set anywhere.\n",
+ "    \"\"\"\n",
+ "    try:\n",
+ "        from google.colab import userdata\n",
+ "        secret = userdata.get(secret_name)\n",
+ "        if secret:\n",
+ "            return secret\n",
+ "    except Exception:\n",
+ "        # Not running in Colab, or the secret is missing or inaccessible:\n",
+ "        # fall back to environment variables\n",
+ "        pass\n",
+ "    return os.getenv(secret_name)\n",
+ "\n",
+ "# 🔐 Simple API key detection and client setup\n",
+ "if get_secret(\"OPENAI_API_KEY\"):\n",
+ "    print(\"🔒 OpenAI detected\")\n",
+ "    client = OpenAI(api_key=get_secret(\"OPENAI_API_KEY\"))\n",
+ "    MODEL_GPT4 = \"gpt-4o\"\n",
+ "    MODEL_GPT4_MINI = \"gpt-4o-mini\"\n",
+ "elif get_secret(\"AZURE_OPENAI_API_KEY\") and get_secret(\"AZURE_OPENAI_ENDPOINT\"):\n",
+ "    print(\"🔒 Azure OpenAI detected\")\n",
+ "    client = AzureOpenAI(\n",
+ "        azure_endpoint=get_secret(\"AZURE_OPENAI_ENDPOINT\"),\n",
+ "        api_key=get_secret(\"AZURE_OPENAI_API_KEY\"),\n",
+ "        api_version=get_secret(\"AZURE_OPENAI_API_VERSION\") or \"2024-05-01-preview\"\n",
+ "    )\n",
+ "    # On Azure these are deployment names, which may differ from the model names\n",
+ "    MODEL_GPT4 = os.getenv(\"AZURE_OPENAI_MODEL_GPT4\", \"gpt-4o\")\n",
+ "    MODEL_GPT4_MINI = os.getenv(\"AZURE_OPENAI_MODEL_GPT4_MINI\", \"gpt-4o-mini\")\n",
+ "else:\n",
+ "    print(\"❌ No API keys found!\")\n",
+ "    print(\"Set one of the following environment variables:\")\n",
+ "    print(\"  OpenAI: OPENAI_API_KEY\")\n",
+ "    print(\"  Azure OpenAI: AZURE_OPENAI_API_KEY + AZURE_OPENAI_ENDPOINT\")\n",
+ "    sys.exit(1)"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {},

+ "source": [

+ "## Redis Vector Search Index Setup\n",

+ "\n",

+ "We're setting up a Redis search index optimized for semantic caching with vector similarity search:\n",

+ "\n",

+ "**Index Configuration:**\n",

+ "- **Algorithm**: HNSW (Hierarchical Navigable Small World) for fast approximate nearest neighbor search\n",

+ "- **Distance Metric**: Cosine distance for semantic text comparison (lower means more similar)\n",

+ "- **Vector Dimensions**: 384 (matching our sentence-transformer model)\n",

+ "- **Storage**: Hash-based for efficient retrieval\n",

+ "\n",

+ "**Fields Stored:**\n",

+ "- `content_vector`: The 384-dimensional embedding of the prompt that produced the cached response\n",

+ "- `content`: The original text response from the LLM\n",

+ "- `user_id`: Which user generated this cache entry\n",

+ "- `prompt`: The original query that generated this response\n",

+ "- `model`: Which LLM model was used (gpt-4o vs gpt-4o-mini)\n",

+ "- `created_at`: Timestamp for cache expiration and analytics\n",

+ "\n",

+ "This setup is designed to keep similarity searches fast even across thousands of cached responses."

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": 5,

+ "metadata": {},

+ "outputs": [

+ {

+ "name": "stdout",

+ "output_type": "stream",

+ "text": [

+ "12:16:59 redisvl.index.index INFO Index already exists, overwriting.\n"

+ ]

+ }

+ ],

+ "source": [

+ "# RedisVL index configuration\n",
+ "index_config = {\n",
+ "    \"index\": {\n",
+ "        \"name\": \"cesc_index\",\n",
+ "        \"prefix\": \"cesc\",\n",
+ "        \"storage_type\": \"hash\"\n",
+ "    },\n",
+ "    \"fields\": [\n",
+ "        {\n",
+ "            \"name\": \"content_vector\",\n",
+ "            \"type\": \"vector\",\n",
+ "            \"attrs\": {\n",
+ "                \"dims\": 384,\n",
+ "                \"distance_metric\": \"cosine\",\n",
+ "                \"algorithm\": \"hnsw\"\n",
+ "            }\n",
+ "        },\n",
+ "        {\"name\": \"content\", \"type\": \"text\"},\n",
+ "        {\"name\": \"user_id\", \"type\": \"tag\"},\n",
+ "        {\"name\": \"prompt\", \"type\": \"text\"},\n",
+ "        {\"name\": \"model\", \"type\": \"tag\"},\n",
+ "        {\"name\": \"created_at\", \"type\": \"numeric\"},\n",
+ "    ]\n",
+ "}\n",
+ "\n",
+ "# Create and connect the search index\n",
+ "search_index = SearchIndex.from_dict(index_config)\n",
+ "search_index.connect(redis_url)\n",
+ "search_index.create(overwrite=True)\n",
+ "\n",
+ "# Initialize embedding model and vectorizer for semantic search\n",
+ "embedding_model = SentenceTransformer(\"all-MiniLM-L6-v2\")\n",
+ "vectorizer = HFTextVectorizer(model=\"all-MiniLM-L6-v2\")"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {},

+ "source": [

+ "## Telemetry and Token Counting\n",

+ "\n",

+ "These utilities help us measure and analyze the performance benefits of our caching system:\n",

+ "\n",

+ "**TokenCounter:**\n",

+ "- Accurately counts input/output tokens for cost calculation\n",

+ "- Uses tiktoken library with model-specific encodings\n",

+ "- Essential for measuring cost savings vs. baseline GPT-4o calls\n",

+ "\n",

+ "**TelemetryLogger:**\n",

+ "- Tracks latency, token usage, and costs for each query\n",

+ "- Categorizes responses: `miss` (cold LLM call), `hit_raw` (cache), `hit_personalized` (cache + customization)\n",

+ "- Calculates cost savings compared to always using GPT-4o\n",

+ "- Provides detailed analytics tables and summaries\n",

+ "\n",

+ "This data demonstrates the ROI of Context-Enabled Semantic Caching in real-world scenarios."

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": 7,

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "# Token counter for accurate cost calculation\n",
+ "class TokenCounter:\n",
+ "    def __init__(self, model_name=\"gpt-4o\"):\n",
+ "        try:\n",
+ "            self.encoding = tiktoken.encoding_for_model(model_name)\n",
+ "        except KeyError:\n",
+ "            # Unknown model name: fall back to a general-purpose encoding\n",
+ "            self.encoding = tiktoken.get_encoding(\"cl100k_base\")\n",
+ "\n",
+ "    def count_tokens(self, text: str) -> int:\n",
+ "        if not text:\n",
+ "            return 0\n",
+ "        return len(self.encoding.encode(text))\n",
+ "\n",
+ "token_counter = TokenCounter()\n",
+ "\n",
+ "class TelemetryLogger:\n",
+ "    def __init__(self):\n",
+ "        self.logs = []\n",
+ "\n",
+ "    def log(self, user_id, method, latency_ms, input_tokens, output_tokens, cache_status, response_source):\n",
+ "        # response_source is the model name (e.g., \"gpt-4o\" or \"gpt-4o-mini\"), or \"cache\" for raw hits\n",
+ "        # 💵 Real cost vs baseline cold-call cost\n",
+ "        cost = self.calculate_cost(response_source, input_tokens, output_tokens)\n",
+ "        baseline = self.calculate_cost(\"gpt-4o\", input_tokens, output_tokens)\n",
+ "\n",
+ "        self.logs.append({\n",
+ "            \"timestamp\": time.time(),\n",
+ "            \"user_id\": user_id,\n",
+ "            \"method\": method,\n",
+ "            \"latency_ms\": latency_ms,\n",
+ "            \"input_tokens\": input_tokens,\n",
+ "            \"output_tokens\": output_tokens,\n",
+ "            \"total_tokens\": input_tokens + output_tokens,\n",
+ "            \"cache_status\": cache_status,\n",
+ "            \"response_source\": response_source,\n",
+ "            \"cost_usd\": cost,\n",
+ "            \"baseline_cost_usd\": baseline\n",
+ "        })\n",
+ "\n",
+ "    def show_logs(self):\n",
+ "        return pd.DataFrame(self.logs)\n",
+ "\n",
+ "    def summarize(self):\n",
+ "        df = pd.DataFrame(self.logs)\n",
+ "        if df.empty:\n",
+ "            print(\"No telemetry yet.\")\n",
+ "            return\n",
+ "\n",
+ "        df[\"total_tokens\"] = df[\"input_tokens\"] + df[\"output_tokens\"]\n",
+ "\n",
+ "        display(df[[\n",
+ "            \"user_id\",\n",
+ "            \"cache_status\",\n",
+ "            \"latency_ms\",\n",
+ "            \"response_source\",\n",
+ "            \"input_tokens\",\n",
+ "            \"output_tokens\",\n",
+ "            \"total_tokens\"\n",
+ "        ]])\n",
+ "\n",
+ "        # Compare cold start vs personalized\n",
+ "        try:\n",
+ "            cold_latency = df.loc[df[\"user_id\"] == \"user_cold\", \"latency_ms\"].values[0]\n",
+ "            cx_latency = df.loc[df[\"user_id\"] == \"user_withcontext\", \"latency_ms\"].values[0]\n",
+ "\n",
+ "            if cx_latency < cold_latency:\n",
+ "                delta = cold_latency - cx_latency\n",
+ "                pct = (delta / cold_latency) * 100\n",
+ "                print(f\"\\n⚡ Personalized response (user_withcontext) was faster than the plain LLM by {int(delta)} ms — a {pct:.1f}% speed boost.\")\n",
+ "            else:\n",
+ "                delta = cx_latency - cold_latency\n",
+ "                # Express the slowdown relative to the cold-call baseline\n",
+ "                pct = (delta / cold_latency) * 100\n",
+ "                print(f\"\\n⏱️ Personalized response (user_withcontext) was {int(delta)} ms slower than the plain LLM — a {pct:.1f}% slowdown.\")\n",
+ "                print(\"📌 However, it returned a tailored response based on user memory, offering higher relevance.\")\n",
+ "        except Exception as e:\n",
+ "            print(\"\\n⚠️ Could not compute latency comparison:\", e)\n",
+ "\n",
+ "    def calculate_cost(self, model: str, input_tokens: int, output_tokens: int) -> float:\n",
+ "        # Illustrative pricing in USD per 1K tokens; check your provider's current rates\n",
+ "        pricing = {\n",
+ "            \"gpt-4o\": {\"input\": 0.005, \"output\": 0.015},\n",
+ "            \"gpt-4o-mini\": {\"input\": 0.0015, \"output\": 0.003}\n",
+ "        }\n",
+ "\n",
+ "        # Unknown sources (e.g., \"cache\") are treated as free\n",
+ "        if model not in pricing:\n",
+ "            return 0.0\n",
+ "\n",
+ "        input_cost = (input_tokens / 1000) * pricing[model][\"input\"]\n",
+ "        output_cost = (output_tokens / 1000) * pricing[model][\"output\"]\n",
+ "        return round(input_cost + output_cost, 6)\n",
+ "\n",
+ "    def display_cost_summary(self):\n",
+ "        df = self.show_logs()\n",
+ "        if df.empty:\n",
+ "            print(\"No telemetry logged yet.\")\n",
+ "            return\n",
+ "\n",
+ "        # Calculate savings per row\n",
+ "        df[\"savings_usd\"] = df[\"baseline_cost_usd\"] - df[\"cost_usd\"]\n",
+ "\n",
+ "        total_cost = df[\"cost_usd\"].sum()\n",
+ "        baseline_cost = df[\"baseline_cost_usd\"].sum()\n",
+ "        total_savings = df[\"savings_usd\"].sum()\n",
+ "        savings_pct = (total_savings / baseline_cost * 100) if baseline_cost > 0 else 0\n",
+ "\n",
+ "        # Display summary table\n",
+ "        display(df[[\n",
+ "            \"user_id\", \"cache_status\", \"response_source\",\n",
+ "            \"input_tokens\", \"output_tokens\", \"latency_ms\",\n",
+ "            \"cost_usd\", \"baseline_cost_usd\", \"savings_usd\"\n",
+ "        ]])\n",
+ "\n",
+ "        # 💸 Compare cost of plain LLM vs personalized\n",
+ "        try:\n",
+ "            cost_plain = df.loc[df[\"user_id\"] == \"user_cold\", \"cost_usd\"].values[0]\n",
+ "            cost_personalized = df.loc[df[\"user_id\"] == \"user_withcontext\", \"cost_usd\"].values[0]\n",
+ "\n",
+ "            print(f\"\\n🧾 Total Cost of Plain LLM Response: ${cost_plain:.4f}\")\n",
+ "            print(f\"🧾 Total Cost of Personalized Response: ${cost_personalized:.4f}\")\n",
+ "\n",
+ "            if cost_personalized < cost_plain:\n",
+ "                delta = cost_plain - cost_personalized\n",
+ "                pct = (delta / cost_plain) * 100\n",
+ "                print(f\"\\n💡 Personalized response (user_withcontext) was cheaper than plain LLM by ${delta:.4f} — a {pct:.1f}% cost improvement.\")\n",
+ "            else:\n",
+ "                delta = cost_personalized - cost_plain\n",
+ "                # Express the increase relative to the plain-LLM baseline\n",
+ "                pct = (delta / cost_plain) * 100\n",
+ "                print(f\"\\n⏱️ Personalized response (user_withcontext) was ${delta:.4f} more expensive than plain LLM — a {pct:.1f}% cost increase.\")\n",
+ "                print(\"📌 However, it returned a tailored response based on user memory, offering higher relevance.\")\n",
+ "        except Exception as e:\n",
+ "            print(\"\\n⚠️ Could not compute cost comparison:\", e)"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {},

+ "source": [

+ "## LLM Client: The Intelligence Engine\n",

+ "\n",

+ "The `LLMClient` class serves as our interface to LLM services, handling both fresh content generation and response personalization:\n",

+ "\n",

+ "### Key Components:\n",

+ "- **Dual Model Strategy**: Uses GPT-4o for comprehensive responses and GPT-4o-mini for efficient personalization\n",

+ "- **Token Counting**: Tracks usage for accurate cost calculation and telemetry\n",

+ "- **Response Personalization**: Adapts cached responses using user context and memory\n",

+ "- **Performance Monitoring**: Measures latency and token consumption for each operation\n",

+ "\n",

+ "### Personalization Process:\n",

+ "When a cache hit occurs for a user with stored context, the system:\n",

+ "1. Takes the cached response as a baseline\n",

+ "2. Incorporates user-specific preferences, goals, and history\n",

+ "3. Generates a personalized variant using the lightweight GPT-4o-mini model\n",

+ "4. Maintains the core information while adapting tone and specific recommendations"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": 8,

+ "metadata": {

+ "id": "i3LSCGr3E1t8"

+ },

+ "outputs": [],

+ "source": [

+ "class LLMClient:\n",
+ "    def __init__(self, client, token_counter, gpt4_model=\"gpt-4o\", gpt4mini_model=\"gpt-4o-mini\"):\n",
+ "        self.client = client\n",
+ "        self.token_counter = token_counter\n",
+ "        self.gpt4_model = gpt4_model\n",
+ "        self.gpt4mini_model = gpt4mini_model\n",
+ "\n",
+ "    def call_llm(self, prompt: str, model: str = None) -> Dict:\n",
+ "        \"\"\"Call the LLM and track latency and token usage.\"\"\"\n",
+ "        # Default to the configured full-size model (matters for Azure deployment names)\n",
+ "        model = model or self.gpt4_model\n",
+ "        start_time = time.time()\n",
+ "        response = self.client.chat.completions.create(\n",
+ "            model=model,\n",
+ "            messages=[{\"role\": \"user\", \"content\": prompt}],\n",
+ "            temperature=0.7,\n",
+ "            max_tokens=200\n",
+ "        )\n",
+ "        latency = (time.time() - start_time) * 1000  # ms\n",
+ "\n",
+ "        output = response.choices[0].message.content\n",
+ "        # Token counts are estimated locally with tiktoken\n",
+ "        input_tokens = self.token_counter.count_tokens(prompt)\n",
+ "        output_tokens = self.token_counter.count_tokens(output)\n",
+ "\n",
+ "        return {\n",
+ "            \"response\": output,\n",
+ "            \"latency_ms\": round(latency, 2),\n",
+ "            \"input_tokens\": input_tokens,\n",
+ "            \"output_tokens\": output_tokens,\n",
+ "            \"model\": model\n",
+ "        }\n",
+ "\n",
+ "    def call_gpt4(self, prompt: str) -> Dict:\n",
+ "        return self.call_llm(prompt, model=self.gpt4_model)\n",
+ "\n",
+ "    def call_gpt4mini(self, prompt: str) -> Dict:\n",
+ "        return self.call_llm(prompt, model=self.gpt4mini_model)\n",
+ "\n",
+ "    def personalize_response(self, cached_response: str, user_context: Dict, original_prompt: str) -> Dict:\n",
+ "        context_prompt = self._build_context_prompt(cached_response, user_context, original_prompt)\n",
+ "        start_time = time.time()\n",
+ "        response = self.client.chat.completions.create(\n",
+ "            model=self.gpt4mini_model,\n",
+ "            messages=[\n",
+ "                {\"role\": \"system\", \"content\": context_prompt},\n",
+ "                {\"role\": \"user\", \"content\": \"Please personalize this cached response for the user. Keep your response under 3 sentences.\"}\n",
+ "            ]\n",
+ "        )\n",
+ "        latency = (time.time() - start_time) * 1000  # ms\n",
+ "        reply = response.choices[0].message.content\n",
+ "\n",
+ "        # Token counts as reported by the API for this call\n",
+ "        input_tokens = response.usage.prompt_tokens\n",
+ "        output_tokens = response.usage.completion_tokens\n",
+ "        total_tokens = response.usage.total_tokens\n",
+ "\n",
+ "        return {\n",
+ "            \"response\": reply,\n",
+ "            \"latency_ms\": round(latency, 2),\n",
+ "            \"input_tokens\": input_tokens,\n",
+ "            \"output_tokens\": output_tokens,\n",
+ "            \"tokens\": total_tokens,\n",
+ "            \"model\": self.gpt4mini_model\n",
+ "        }\n",
+ "\n",
+ "    def _build_context_prompt(self, cached_response: str, user_context: Dict, prompt: str) -> str:\n",
+ "        # Collect whichever memory types the user has into a short text block\n",
+ "        context_parts = []\n",
+ "        if user_context.get(\"preferences\"):\n",
+ "            context_parts.append(\"User preferences: \" + \", \".join(user_context[\"preferences\"]))\n",
+ "        if user_context.get(\"goals\"):\n",
+ "            context_parts.append(\"User goals: \" + \", \".join(user_context[\"goals\"]))\n",
+ "        if user_context.get(\"history\"):\n",
+ "            context_parts.append(\"User history: \" + \", \".join(user_context[\"history\"]))\n",
+ "        context_blob = \"\\n\".join(context_parts)\n",
+ "        return f\"\"\"You are a personalization assistant. A cached response was previously generated for the prompt: \"{prompt}\".\n",
+ "\n",
+ "Here is the cached response:\n",
+ "\\\"\\\"\\\"{cached_response}\\\"\\\"\\\"\n",
+ "\n",
+ "Use the user's context below to personalize and refine the response:\n",
+ "{context_blob}\n",
+ "\n",
+ "Respond in a way that feels tailored to this user, adjusting tone, content, or suggestions as needed. Keep your response under 3 sentences no matter what.\n",
+ "\"\"\""

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {},

+ "source": [

+ "## Context-Enabled Semantic Cache: The Core Engine\n",

+ "\n",

+ "The `ContextEnabledSemanticCache` class orchestrates the entire caching and personalization workflow:\n",

+ "\n",

+ "### Architecture Overview:\n",

+ "- **Vector Storage**: Uses Redis with HNSW indexing for fast semantic similarity search\n",

+ "- **User Memory System**: Maintains preferences, goals, and history for each user\n",

+ "- **Three-Tier Response Strategy**:\n",

+ " - **Cache Miss**: Generate fresh response using GPT-4o (comprehensive but expensive)\n",

+ " - **Cache Hit (No Context)**: Return cached response instantly (fast and free)\n",

+ " - **Cache Hit (With Context)**: Personalize cached response using GPT-4o-mini (fast and cheap)\n",

+ "\n",

+ "### Key Methods:\n",

+ "- `add_user_memory()`: Store user context (preferences, goals, history)\n",

+ "- `search_cache()`: Find semantically similar cached responses using vector search\n",

+ "- `store_response()`: Save new responses with TTL and vector embeddings\n",

+ "- `query()`: Main entry point that determines cache hit/miss and response strategy\n",

+ "\n",

+ "### Performance Benefits:\n",

+ "- **Speed**: Cache hits respond in <100ms vs 2-5 seconds for fresh generation\n",

+ "- **Cost**: 60-80% savings on repeat queries through caching and model optimization\n",

+ "- **Relevance**: Personalized responses feel tailored to each user's context and expertise"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": 9,

+ "metadata": {

+ "id": "6APF2GQaE3fm"

+ },

+ "outputs": [],

+ "source": [

+ "from redisvl.query import VectorQuery\n",
+ "\n",
+ "class ContextEnabledSemanticCache:\n",
+ "    def __init__(self, redis_index, vectorizer, llm_client: \"LLMClient\", telemetry: \"TelemetryLogger\", cache_ttl: int = -1):\n",
+ "        self.index = redis_index\n",
+ "        self.vectorizer = vectorizer\n",
+ "        self.llm = llm_client\n",
+ "        self.telemetry = telemetry\n",
+ "        self.user_memories: Dict[str, Dict] = {}\n",
+ "        self.cache_ttl = cache_ttl  # seconds, -1 for no expiry\n",
+ "\n",
+ "    def add_user_memory(self, user_id: str, memory_type: str, content: str):\n",
+ "        # memory_type must be one of \"preferences\", \"history\", or \"goals\"\n",
+ "        if user_id not in self.user_memories:\n",
+ "            self.user_memories[user_id] = {\"preferences\": [], \"history\": [], \"goals\": []}\n",
+ "        self.user_memories[user_id][memory_type].append(content)\n",
+ "\n",
+ "    def get_user_memory(self, user_id: str) -> Dict:\n",
+ "        return self.user_memories.get(user_id, {})\n",
+ "\n",
+ "    def generate_embedding(self, text: str) -> List[float]:\n",
+ "        # Disable progress bar for cleaner output\n",
+ "        return self.vectorizer.embed(text, show_progress_bar=False)\n",
+ "\n",
+ "    def search_cache(\n",
+ "        self,\n",
+ "        embedding: List[float],\n",
+ "        distance_threshold: float = 0.2,  # Loosened for consistency\n",
+ "    ):\n",
+ "        \"\"\"\n",
+ "        Find the best cached match and gate it by a distance threshold.\n",
+ "        The score returned by RediSearch (HNSW + cosine) is a distance (lower is better).\n",
+ "        We accept a hit if distance <= distance_threshold.\n",
+ "        \"\"\"\n",
+ "        return_fields = [\"content\", \"user_id\", \"prompt\", \"model\", \"created_at\"]\n",
+ "        query = VectorQuery(\n",
+ "            vector=embedding,\n",
+ "            vector_field_name=\"content_vector\",\n",
+ "            return_fields=return_fields,\n",
+ "            num_results=1,\n",
+ "            return_score=True,\n",
+ "        )\n",
+ "        results = self.index.query(query)\n",
+ "\n",
+ "        if results:\n",
+ "            first = results[0]\n",
+ "            # Use 'vector_distance' which is the standard score field in redisvl\n",
+ "            score = first.get(\"vector_distance\", None)\n",
+ "            if score is not None and float(score) <= distance_threshold:\n",
+ "                return {field: first[field] for field in return_fields}\n",
+ "\n",
+ "        return None\n",
+ "\n",
+ "    def store_response(self, prompt: str, response: str, embedding: List[float], user_id: str, model: str):\n",
+ "        # Hash storage expects the vector as raw float32 bytes\n",
+ "        vec_bytes = np.array(embedding, dtype=np.float32).tobytes()\n",
+ "\n",
+ "        doc = {\n",
+ "            \"content\": response,\n",
+ "            \"content_vector\": vec_bytes,\n",
+ "            \"user_id\": user_id,\n",
+ "            \"prompt\": prompt,\n",
+ "            \"model\": model,\n",
+ "            \"created_at\": int(time.time())\n",
+ "        }\n",
+ "\n",
+ "        # Use a unique key for each entry and set TTL\n",
+ "        key = f\"{self.index.prefix}:{uuid.uuid4()}\"\n",
+ "        self.index.load([doc], keys=[key])\n",
+ "\n",
+ "        if self.cache_ttl > 0:\n",
+ "            # We need a direct redis-py client to set TTL on the hash key\n",
+ "            redis_client = self.index.client\n",
+ "            redis_client.expire(key, self.cache_ttl)\n",
+ "\n",
+ "    def query(self, prompt: str, user_id: str):\n",
+ "        start_time = time.time()\n",
+ "        embedding = self.generate_embedding(prompt)\n",
+ "        cached_result = self.search_cache(embedding)\n",
+ "\n",
+ "        if cached_result:\n",
+ "            cached_response = cached_result[\"content\"]\n",
+ "            user_context = self.get_user_memory(user_id)\n",
+ "            if user_context:\n",
+ "                # Cache hit with user memory: personalize with the lightweight model\n",
+ "                result = self.llm.personalize_response(cached_response, user_context, prompt)\n",
+ "                self.telemetry.log(\n",
+ "                    user_id=user_id,\n",
+ "                    method=\"context_query\",\n",
+ "                    latency_ms=result[\"latency_ms\"],\n",
+ "                    input_tokens=result[\"input_tokens\"],\n",
+ "                    output_tokens=result[\"output_tokens\"],\n",
+ "                    cache_status=\"hit_personalized\",\n",
+ "                    response_source=result[\"model\"]\n",
+ "                )\n",
+ "                return result[\"response\"]\n",
+ "            else:\n",
+ "                # Measure actual cache hit latency (embedding + Redis query time)\n",
+ "                cache_latency = (time.time() - start_time) * 1000\n",
+ "                self.telemetry.log(\n",
+ "                    user_id=user_id,\n",
+ "                    method=\"context_query\",\n",
+ "                    latency_ms=round(cache_latency, 2),\n",
+ "                    input_tokens=0,\n",
+ "                    output_tokens=0,\n",
+ "                    cache_status=\"hit_raw\",\n",
+ "                    response_source=\"cache\"\n",
+ "                )\n",
+ "                return cached_response\n",
+ "\n",
+ "        else:\n",
+ "            # Cache miss: generate a fresh response with the full model and cache it\n",
+ "            result = self.llm.call_gpt4(prompt)\n",
+ "            self.store_response(prompt, result[\"response\"], embedding, user_id, result[\"model\"])\n",
+ "            self.telemetry.log(\n",
+ "                user_id=user_id,\n",
+ "                method=\"context_query\",\n",
+ "                latency_ms=result[\"latency_ms\"],\n",
+ "                input_tokens=result[\"input_tokens\"],\n",
+ "                output_tokens=result[\"output_tokens\"],\n",
+ "                cache_status=\"miss\",\n",
+ "                response_source=result[\"model\"]\n",
+ "            )\n",
+ "            return result[\"response\"]\n"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {

+ "id": "RgmW_S6s9Sy_"

+ },

+ "source": [

+ "## Scenario Setup: IT Support Dashboard Access\n",

+ "\n",

+ "We'll simulate three different approaches to handling the same IT support query:\n",

+ "- **User A (Cold)**: No cache, fresh LLM call every time\n",

+ "- **User B (No Context)**: Cache hit, but generic response \n",

+ "- **User C (With Context)**: Cache hit + personalization based on user memory\n",

+ "\n",

+ "The query: *A user in the finance department can't access the dashboard — what should I check?*\n",

+ "\n",

+ "### User Context Profile\n",

+ "User C represents an experienced IT support agent who:\n",

+ "- Specializes in finance department issues\n",

+ "- Has solved similar dashboard access problems before\n",

+ "- Uses specific tools and follows established troubleshooting patterns\n",

+ "- Needs responses tailored to their expertise level and current context"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": 10,

+ "metadata": {

+ "colab": {

+ "base_uri": "https://localhost:8080/"

+ },

+ "id": "zji4u12fgQZg",

+ "outputId": "cfc5cc09-381c-4d6e-8c43-0dcd98760edd"

+ },

+ "outputs": [

+ {

+ "name": "stdout",

+ "output_type": "stream",

+ "text": [

+ "\n",

+ "============================================================\n",

+ "🧊 Scenario 1: Plain LLM – cache miss\n",

+ "============================================================\n",

+ "First, ensure the user has the correct permissions or roles assigned to access the dashboard. Next, verify if there are connectivity issues, incorrect login credentials, or if the dashboard tool is experiencing outages. If everything seems fine, check if their account is active and not locked or expired.\n",

+ "\n",

+ "============================================================\n",

+ "📦 Scenario 2: Semantic Cache Hit – generic, extremely fast, no user memory\n",

+ "============================================================\n",

+ "First, ensure the user has the correct permissions or roles assigned to access the dashboard. Next, verify if there are connectivity issues, incorrect login credentials, or if the dashboard tool is experiencing outages. If everything seems fine, check if their account is active and not locked or expired.\n",

+ "\n",

+ "============================================================\n",

+ "🧠 Scenario 3: Context-Enabled Semantic Cache Hit – personalized with user memory\n",

+ "============================================================\n",

+ "First, check if the user’s 'finance_dashboard_viewer' role is correctly configured to grant access to the dashboard. Since you know that SSO setups can sometimes be tricky, ensure there are no login issues and that the necessary permissions are intact. Lastly, verify that their account is active and not locked, especially after recent troubleshooting efforts.\n",

+ "\n"

+ ]

+ }

+ ],

+ "source": [

+ "from IPython.display import clear_output, display, Markdown\n",

+ "clear_output(wait=True)\n",

+ "\n",

+ "# 🔁 Reset Redis index and telemetry (optional for rerun clarity)\n",

+ "search_index.delete()\n",

+ "search_index.create(overwrite=True)\n",

+ "\n",

+ "# Initialize telemetry and engine\n",

+ "telemetry_logger = TelemetryLogger()\n",

+ "cesc = ContextEnabledSemanticCache(\n",

+ " redis_index=search_index,\n",

+ " vectorizer=vectorizer,\n",

+ " llm_client=LLMClient(client, token_counter, MODEL_GPT4, MODEL_GPT4_MINI),\n",

+ " telemetry=telemetry_logger,\n",

+ " cache_ttl=3600 # Expire cache entries after 1 hour\n",

+ ")\n",

+ "\n",

+ "def get_divider(title: str = \"\", width: int = 60) -> str:\n",

+ "    line = \"=\" * width\n",

+ "    if title:\n",

+ "        return f\"\\n{line}\\n{title}\\n{line}\\n\"\n",

+ "    else:\n",

+ "        return f\"\\n{line}\\n\"\n",

+ "\n",

+ "# 🧪 Define demo prompt and users\n",

+ "prompt = \"A user in the finance department can't access the dashboard — what should I check? Answer in 2-3 sentences max.\"\n",

+ "users = {\n",

+ " \"cold\": \"user_cold\",\n",

+ " \"nocx\": \"user_nocontext\",\n",

+ " \"cx\": \"user_withcontext\"\n",

+ "}\n",

+ "\n",

+ "# 🧠 Add memory for personalized user (e.g., an IT support agent who handles finance team issues)\n",

+ "cesc.add_user_memory(users[\"cx\"], \"preferences\", \"uses Chrome browser on macOS\")\n",

+ "cesc.add_user_memory(users[\"cx\"], \"goals\", \"resolve access issues efficiently for finance team users\")\n",

+ "cesc.add_user_memory(users[\"cx\"], \"history\", \"frequently resolves issues with 'finance_dashboard_viewer' role misconfigurations\")\n",

+ "cesc.add_user_memory(users[\"cx\"], \"history\", \"troubleshot recent problems with finance dashboard access and SSO\")\n",

+ "\n",

+ "# 🔍 Run prompt for each scenario and collect output\n",

+ "output_parts = []\n",

+ "\n",

+ "output_parts.append(get_divider(\"🧊 Scenario 1: Plain LLM – cache miss\"))\n",

+ "response_1 = cesc.query(prompt, user_id=users[\"cold\"])\n",

+ "output_parts.append(response_1 + \"\\n\")\n",

+ "\n",

+ "output_parts.append(get_divider(\"📦 Scenario 2: Semantic Cache Hit – generic, extremely fast, no user memory\"))\n",

+ "response_2 = cesc.query(prompt, user_id=users[\"nocx\"])\n",

+ "output_parts.append(response_2 + \"\\n\")\n",

+ "\n",

+ "output_parts.append(get_divider(\"🧠 Scenario 3: Context-Enabled Semantic Cache Hit – personalized with user memory\"))\n",

+ "response_3 = cesc.query(prompt, user_id=users[\"cx\"])\n",

+ "output_parts.append(response_3 + \"\\n\")\n",

+ "\n",

+ "# Print all collected output at once\n",

+ "print(\"\".join(output_parts))\n"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {

+ "id": "gJ-fUMmY9X4V"

+ },

+ "source": [

+ "## Key Observations\n",

+ "\n",

+ "Notice the different response patterns:\n",

+ "\n",

+ "1. **Cold Start Response**: Comprehensive but generic; the slowest and most expensive path\n",

+ "2. **Cache Hit Response**: Identical to the cold-start answer, returned almost instantly at minimal cost\n",

+ "3. **Personalized Response**: Adapted for user's specific role, tools, and experience level\n",

+ "\n",

+ "The personalized response demonstrates how CESC can:\n",

+ "- Reference user's specific browser/OS (Chrome on macOS)\n",

+ "- Mention role-specific permissions (finance_dashboard_viewer role)\n",

+ "- Reference past experience (SSO troubleshooting history)\n",

+ "- Maintain professional tone appropriate for experienced IT staff"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": 11,

+ "metadata": {

+ "colab": {

+ "base_uri": "https://localhost:8080/",

+ "height": 600

+ },

+ "id": "zJdBei1UkQHO",

+ "outputId": "6df548bd-ec88-41b7-bf61-295e57d0cfbb"

+ },

+ "outputs": [

+ {

+ "name": "stdout",

+ "output_type": "stream",

+ "text": [

+ "\n",

+ "============================================================\n",

+ "📈 Telemetry Summary:\n",

+ "============================================================\n",

+ "\n"

+ ]

+ },

+ {

+ "data": {

+ "text/html": [

+ "

"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {

+ "id": "4i9pSolc896M"

+ },

+ "source": [

+ "## What is Context-Enabled Semantic Caching?\n",

+ "\n",

+ "\n",

+ "Most caching systems today are **exact match**. They only return results if the query matches a key 1:1. \n",

+ "Ask **“What’s the weather in NYC?”**, and the system might cache and return that exact string. \n",

+ "But change it slightly—**“Is it raining in New York?”**—and you miss the cache completely.\n",

+ "\n",

+ "**Semantic caching** fixes that. It uses **vector embeddings** to find conceptually similar queries. \n",

+ "So whether a user asks “forecast for NYC,” “weather in Manhattan,” or “umbrella needed in NYC?”, they all hit the **same cached result** if the meaning aligns.\n",

+ "\n",

+ "But here’s the problem: \n",

+ "Even if you nail semantic similarity, **not all users want the same level of detail or format**. \n",

+ "With LLMs storing more history and memory on users, this is a chance to tailor responses to be fully personalized at fractions of the cost.\n",

+ "\n",

+ "That’s where **Context-Enabled Semantic Caching (CESC)** comes in.\n",

+ "\n",

+ "---\n",

+ "\n",

+ "\n",

+ "\n",

+ "### The Business Problem\n",

+ "\n",

+ "Enterprise LLM applications face three critical challenges:\n",

+ "- **Cost**: GPT-4o calls can cost $0.0025-0.01 per 1K tokens\n",

+ "- **Latency**: Cold LLM calls take 2-5 seconds, hurting user experience \n",

+ "- **Relevance**: Generic responses don't account for user roles, preferences, or context\n",

+ "\n",

+ "### Why It Matters\n",

+ "\n",

+ "| Challenge | Traditional Caching | Semantic Caching | CESC (Personalized) |\n",

+ "|----------------|-----------------------------|----------------------------------------|-------------------------------------------|\n",

+ "| **Match Type** | Exact string | Vector similarity | Vector + user context |\n",

+ "| **Relevance** | Low | Medium | High |\n",

+ "| **Latency** | Fast | Fast | Still fast (cached + lightweight model) |\n",

+ "| **Cost** | Low | Low | Low (personalization uses GPT-4o-mini instead of a full GPT-4o call) |\n",

+ "\n",

+ "\n",

+ "\n",

+ "---\n",

+ "\n",

+ "### Our Solution Architecture\n",

+ "\n",

+ "CESC creates a three-tier response system:\n",

+ "1. **Cold Start**: Fresh LLM call for new queries (expensive, slow, but comprehensive)\n",

+ "2. **Cache Hit**: Instant return of semantically similar cached responses (fast, cheap, generic)\n",

+ "3. **Personalized Cache Hit**: Lightweight model personalizes cached content using user memory (balanced speed/cost/relevance)\n",

+ "\n",

+ "Let's see this in action with a real enterprise IT support scenario.\n",

+ "[](https://mermaid.live/edit#pako:eNpdkU1uwjAQha9izTpQfkyAqEJCqdQNlSBpWTRh4SYDiRTbaOKUAkLqFXrFnqROgmjVWdnz5n1-8pwh0SmCB9tCH5JMkGGLIFbM1ip6KZHYqkI6blinM2NhtMbEaGIhCkqy-ze6mwWY5uV6sWk9oZ1jSjMpTJI1nkX0uHz-_vzimvmiKFqQH4UWgyxXtplkeHX7jRhEAZqKFDOa1Qn-on-583qKcnxHNlfl4TY2vyao6uwSpaZjS_0j_9eWt4wdmaucLZFKrUSRn7DNG4ADO8pT8LaiKNEBiSRFfYdzzY3BZCgxBs8eU9yKqjAxxOpifXuhXrWW4BmqrJN0tctunGqfCoMPudiRkLcuoUqRfF0pAx7vTxsIeGf4AG867Lp8POmNXT4YuLYcOILXd6ddPhzzSd8d8Snn3L04cGqe7XUn45EDdk32y5_aZTc7v_wAqpSdUg)"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {},

+ "source": [

+ "## Install dependencies"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": 1,

+ "metadata": {

+ "id": "v6g7eVRZAcFA"

+ },

+ "outputs": [],

+ "source": [

+ "# 📦 Install required Python packages\n",

+ "!pip install -q \"redisvl>=0.8.0\" sentence-transformers openai tiktoken python-dotenv redis google pandas"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {},

+ "source": [

+ "## Run a Redis instance\n",

+ "\n",

+ "\n",

+ "#### For Colab\n",

+ "Use the shell script below to download, extract, and install [Redis Stack](https://redis.io/docs/getting-started/install-stack/) directly from the Redis package archive."

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {

+ "id": "m04KxSuhBiOx"

+ },

+ "outputs": [],

+ "source": [

+ "%%sh\n",

+ "# NBVAL_SKIP\n",

+ "curl -fsSL https://packages.redis.io/gpg | sudo gpg --dearmor -o /usr/share/keyrings/redis-archive-keyring.gpg\n",

+ "echo \"deb [signed-by=/usr/share/keyrings/redis-archive-keyring.gpg] https://packages.redis.io/deb $(lsb_release -cs) main\" | sudo tee /etc/apt/sources.list.d/redis.list\n",

+ "sudo apt-get update > /dev/null 2>&1\n",

+ "sudo apt-get install redis-stack-server > /dev/null 2>&1\n",

+ "redis-stack-server --daemonize yes"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {},

+ "source": [

+ "#### For Alternative Environments\n",

+ "There are many ways to get the necessary redis-stack instance running:\n",

+ "1. On cloud, deploy a [FREE instance of Redis in the cloud](https://redis.com/try-free/). Or, if you have your\n",

+ "own version of Redis Enterprise running, that works too!\n",

+ "2. Per OS, [see the docs](https://redis.io/docs/latest/operate/oss_and_stack/install/install-stack/)\n",

+ "3. With docker: `docker run -d --name redis-stack-server -p 6379:6379 redis/redis-stack-server:latest`"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {

+ "id": "xlsHkIF49Lve"

+ },

+ "source": [

+ "## Infrastructure Setup\n",

+ "\n",

+ "We're using Redis with vector search capabilities to store embeddings and enable semantic similarity matching. This simulates a production environment where your cache would be persistent across sessions.\n",

+ "\n",

+ "**Note**: In production, you'd typically use Redis Enterprise, or a managed Redis service such as Redis Cloud or Azure Managed Redis with proper clustering, persistence, and security configurations."

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": 2,

+ "metadata": {

+ "colab": {

+ "base_uri": "https://localhost:8080/"

+ },

+ "id": "we-6LpNAByt1",

+ "outputId": "89b7e9c1-63f9-4458-cdab-0bc98b88a09e"

+ },

+ "outputs": [

+ {

+ "data": {

+ "text/plain": [

+ "True"

+ ]

+ },

+ "execution_count": 2,

+ "metadata": {},

+ "output_type": "execute_result"

+ }

+ ],

+ "source": [

+ "import os\n",

+ "import redis\n",

+ "\n",

+ "# Redis connection params\n",

+ "REDIS_HOST = os.getenv(\"REDIS_HOST\", \"localhost\")\n",

+ "REDIS_PORT = int(os.getenv(\"REDIS_PORT\", \"6379\"))\n",

+ "REDIS_PASSWORD = os.getenv(\"REDIS_PASSWORD\", \"\")\n",

+ "\n",

+ "# Create Redis client\n",

+ "redis_client = redis.Redis(\n",

+ " host=REDIS_HOST,\n",

+ " port=REDIS_PORT,\n",

+ " password=REDIS_PASSWORD\n",

+ ")\n",

+ "\n",

+ "redis_url = f\"redis://:{REDIS_PASSWORD}@{REDIS_HOST}:{REDIS_PORT}\" if REDIS_PASSWORD else f\"redis://{REDIS_HOST}:{REDIS_PORT}\"\n",

+ "\n",

+ "# Test connection\n",

+ "redis_client.ping()"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {},

+ "source": [

+ "## Essential Imports\n",

+ "\n",

+ "This cell imports all the key libraries needed for Context-Enabled Semantic Caching:\n",

+ "\n",

+ "**Core AI & ML:**\n",

+ "- `sentence_transformers` - For generating text embeddings using the all-MiniLM-L6-v2 model\n",

+ "- `openai` - Client libraries for both OpenAI and Azure OpenAI APIs\n",

+ "- `tiktoken` - Accurate token counting for cost calculation\n",

+ "\n",

+ "**Redis & Vector Search:**\n",

+ "- `redis` - Direct Redis client for database operations\n",

+ "- `redisvl` - Redis Vector Library for semantic search capabilities\n",

+ "- `SearchIndex` - Vector search index management\n",

+ "- `HFTextVectorizer` - Hugging Face text vectorization utilities\n",

+ "\n",

+ "**Data & Utilities:**\n",

+ "- `pandas` - Data analysis and telemetry reporting\n",

+ "- `numpy` - Numerical operations for vector handling\n",

+ "- `typing` - Type hints for better code clarity\n",

+ "- `dotenv` - Environment variable management for API keys"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": 3,

+ "metadata": {},

+ "outputs": [

+ {

+ "data": {

+ "text/plain": [

+ "True"

+ ]

+ },

+ "execution_count": 3,

+ "metadata": {},

+ "output_type": "execute_result"

+ }

+ ],

+ "source": [

+ "import os\n",

+ "import time\n",

+ "import uuid\n",

+ "import numpy as np\n",

+ "from typing import List, Dict\n",

+ "import redis\n",

+ "from sentence_transformers import SentenceTransformer\n",

+ "from redisvl.index import SearchIndex\n",

+ "from redisvl.utils.vectorize import HFTextVectorizer\n",

+ "from openai import AzureOpenAI, OpenAI\n",

+ "import tiktoken\n",

+ "import pandas as pd\n",

+ "import logging\n",

+ "import sys\n",

+ "\n",

+ "from dotenv import load_dotenv\n",

+ "\n",

+ "# Suppress noisy loggers\n",

+ "logging.getLogger(\"sentence_transformers\").setLevel(logging.WARNING)\n",

+ "logging.getLogger(\"httpx\").setLevel(logging.WARNING)\n",

+ "\n",

+ "# Load environment variables from a .env file in the root of this project\n",

+ "load_dotenv()"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {},

+ "source": [

+ "## LLM Client Setup\n",

+ "\n",

+ "This section handles the detection and initialization of our LLM client. We support both OpenAI and Azure OpenAI with automatic detection based on available environment variables:\n",

+ "\n",

+ "- **Priority 1**: OpenAI (if `OPENAI_API_KEY` is present)\n",

+ "- **Priority 2**: Azure OpenAI (if `AZURE_OPENAI_API_KEY` + `AZURE_OPENAI_ENDPOINT` are present) \n",

+ "- **Fallback**: Exit with clear instructions if no credentials found"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": 4,

+ "metadata": {},

+ "outputs": [

+ {

+ "name": "stdout",

+ "output_type": "stream",

+ "text": [

+ "🔒 Azure OpenAI detected\n"

+ ]

+ }

+ ],

+ "source": [

+ "from typing import Optional\n",

+ "\n",

+ "# Helper function to get secrets from Colab or environment variables\n",

+ "def get_secret(secret_name: str) -> Optional[str]:\n",

+ "    \"\"\"\n",

+ "    Retrieves a secret from Google Colab's userdata if available,\n",

+ "    otherwise falls back to an environment variable.\n",

+ "    Returns None if the secret is not set anywhere.\n",

+ "    \"\"\"\n",

+ "    try:\n",

+ "        from google.colab import userdata\n",

+ "        secret = userdata.get(secret_name)\n",

+ "        if secret:\n",

+ "            return secret\n",

+ "    except Exception:\n",

+ "        # Not in Colab, or the secret is missing or inaccessible: fall back to environment variables\n",

+ "        pass\n",

+ "    return os.getenv(secret_name)\n",

+ "\n",

+ "# 🔐 Simple API key detection and client setup\n",

+ "if get_secret(\"OPENAI_API_KEY\"):\n",

+ "    print(\"🔒 OpenAI detected\")\n",

+ "    client = OpenAI(api_key=get_secret(\"OPENAI_API_KEY\"))\n",

+ "    MODEL_GPT4 = \"gpt-4o\"\n",

+ "    MODEL_GPT4_MINI = \"gpt-4o-mini\"\n",

+ "elif get_secret(\"AZURE_OPENAI_API_KEY\") and get_secret(\"AZURE_OPENAI_ENDPOINT\"):\n",

+ "    print(\"🔒 Azure OpenAI detected\")\n",

+ "    client = AzureOpenAI(\n",

+ "        azure_endpoint=get_secret(\"AZURE_OPENAI_ENDPOINT\"),\n",

+ "        api_key=get_secret(\"AZURE_OPENAI_API_KEY\"),\n",

+ "        api_version=get_secret(\"AZURE_OPENAI_API_VERSION\") or \"2024-05-01-preview\"\n",

+ "    )\n",

+ "    MODEL_GPT4 = os.getenv(\"AZURE_OPENAI_MODEL_GPT4\", \"gpt-4o\")\n",

+ "    MODEL_GPT4_MINI = os.getenv(\"AZURE_OPENAI_MODEL_GPT4_MINI\", \"gpt-4o-mini\")\n",

+ "else:\n",

+ "    print(\"❌ No API keys found!\")\n",

+ "    print(\"Set one of the following environment variables:\")\n",

+ "    print(\"   OpenAI: OPENAI_API_KEY\")\n",

+ "    print(\"   Azure OpenAI: AZURE_OPENAI_API_KEY + AZURE_OPENAI_ENDPOINT\")\n",

+ "    sys.exit(1)"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {},

+ "source": [

+ "## Redis Vector Search Index Setup\n",

+ "\n",

+ "We're setting up a Redis search index optimized for semantic caching with vector similarity search:\n",

+ "\n",

+ "**Index Configuration:**\n",

+ "- **Algorithm**: HNSW (Hierarchical Navigable Small World) for fast approximate nearest neighbor search\n",

+ "- **Distance Metric**: Cosine distance (lower means more similar) for semantic text comparison\n",

+ "- **Vector Dimensions**: 384 (matching our sentence-transformer model)\n",

+ "- **Storage**: Hash-based for efficient retrieval\n",

+ "\n",

+ "**Fields Stored:**\n",

+ "- `content_vector`: The 384-dimensional embedding of the prompt that produced the cached response\n",

+ "- `content`: The original text response from the LLM\n",

+ "- `user_id`: Which user generated this cache entry\n",

+ "- `prompt`: The original query that generated this response\n",

+ "- `model`: Which LLM model was used (gpt-4o vs gpt-4o-mini)\n",

+ "- `created_at`: Timestamp for cache expiration and analytics\n",

+ "\n",

+ "This setup is designed for low-latency similarity searches across thousands of cached responses; actual query times depend on your deployment."

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": 5,

+ "metadata": {},

+ "outputs": [

+ {

+ "name": "stdout",

+ "output_type": "stream",

+ "text": [

+ "12:16:59 redisvl.index.index INFO Index already exists, overwriting.\n"

+ ]

+ }

+ ],

+ "source": [

+ "# RedisVL index configuration\n",

+ "index_config = {\n",

+ " \"index\": {\n",

+ " \"name\": \"cesc_index\",\n",

+ " \"prefix\": \"cesc\",\n",

+ " \"storage_type\": \"hash\"\n",

+ " },\n",

+ " \"fields\": [\n",

+ " {\n",

+ " \"name\": \"content_vector\",\n",

+ " \"type\": \"vector\",\n",

+ " \"attrs\": {\n",

+ " \"dims\": 384,\n",

+ " \"distance_metric\": \"cosine\",\n",

+ " \"algorithm\": \"hnsw\"\n",

+ " }\n",

+ " },\n",

+ " {\"name\": \"content\", \"type\": \"text\"},\n",

+ " {\"name\": \"user_id\", \"type\": \"tag\"},\n",

+ " {\"name\": \"prompt\", \"type\": \"text\"},\n",

+ " {\"name\": \"model\", \"type\": \"tag\"},\n",

+ " {\"name\": \"created_at\", \"type\": \"numeric\"},\n",

+ " ]\n",

+ "}\n",

+ "\n",

+ "# Create and connect the search index\n",

+ "search_index = SearchIndex.from_dict(index_config)\n",

+ "search_index.connect(redis_url)\n",

+ "search_index.create(overwrite=True)\n",

+ "\n",

+ "# Initialize embedding model and vectorizer for semantic search\n",

+ "embedding_model = SentenceTransformer(\"all-MiniLM-L6-v2\")\n",

+ "vectorizer = HFTextVectorizer(model=\"all-MiniLM-L6-v2\")"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {},

+ "source": [

+ "## Telemetry and Token Counting\n",

+ "\n",

+ "These utilities help us measure and analyze the performance benefits of our caching system:\n",

+ "\n",

+ "**TokenCounter:**\n",

+ "- Accurately counts input/output tokens for cost calculation\n",

+ "- Uses tiktoken library with model-specific encodings\n",

+ "- Essential for measuring cost savings vs. baseline GPT-4o calls\n",

+ "\n",

+ "**TelemetryLogger:**\n",

+ "- Tracks latency, token usage, and costs for each query\n",

+ "- Categorizes responses: `miss` (cold LLM call), `hit_raw` (cache), `hit_personalized` (cache + customization)\n",

+ "- Calculates cost savings compared to always using GPT-4o\n",

+ "- Provides detailed analytics tables and summaries\n",

+ "\n",

+ "This data demonstrates the ROI of Context-Enabled Semantic Caching in real-world scenarios."

+ ]

+ },
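
+ {

+ "cell_type": "markdown",

+ "metadata": {},

+ "source": [

+ "As a rough sketch of the arithmetic behind `TelemetryLogger.calculate_cost`, each call is priced per 1K tokens, with separate input and output rates taken from the hard-coded `pricing` table in the next cell:\n",

+ "\n",

+ "$$\\text{cost} = \\frac{n_{\\text{in}}}{1000}\\, p_{\\text{in}} + \\frac{n_{\\text{out}}}{1000}\\, p_{\\text{out}}$$\n",

+ "\n",

+ "For a hypothetical call with 120 input tokens and 60 output tokens (made-up numbers for illustration, not measured results), those rates give:\n",

+ "\n",

+ "- `gpt-4o`: $0.12 \\times 0.005 + 0.06 \\times 0.015 = 0.0015$ USD\n",

+ "- `gpt-4o-mini`: $0.12 \\times 0.0015 + 0.06 \\times 0.003 = 0.00036$ USD\n",

+ "\n",

+ "Under these assumed token counts, serving the request with `gpt-4o-mini` would save $0.00114$ USD, or 76% of the `gpt-4o` baseline. The rates are the demo's own values and may not match your provider's current pricing."

+ ]

+ },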

+ {

+ "cell_type": "code",

+ "execution_count": 7,

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "from IPython.display import display\n",

+ "\n",

+ "# Token counter for accurate cost calculation\n",

+ "class TokenCounter:\n",

+ "    def __init__(self, model_name=\"gpt-4o\"):\n",

+ "        try:\n",

+ "            self.encoding = tiktoken.encoding_for_model(model_name)\n",

+ "        except KeyError:\n",

+ "            # Unknown model name: fall back to a general-purpose encoding\n",

+ "            self.encoding = tiktoken.get_encoding(\"cl100k_base\")\n",

+ "\n",

+ "    def count_tokens(self, text: str) -> int:\n",

+ "        if not text:\n",

+ "            return 0\n",

+ "        return len(self.encoding.encode(text))\n",

+ "\n",

+ "token_counter = TokenCounter()\n",

+ "\n",

+ "class TelemetryLogger:\n",

+ "    def __init__(self):\n",

+ "        self.logs = []\n",

+ "\n",

+ "    def log(self, user_id, method, latency_ms, input_tokens, output_tokens, cache_status, response_source):\n",

+ "        # response_source is the model name (e.g., \"gpt-4o\" or \"gpt-4o-mini\"), or \"cache\" for raw hits\n",

+ "        # 💵 Real cost vs baseline cold-call cost\n",

+ "        cost = self.calculate_cost(response_source, input_tokens, output_tokens)\n",

+ "        baseline = self.calculate_cost(\"gpt-4o\", input_tokens, output_tokens)\n",

+ "\n",

+ "        self.logs.append({\n",

+ "            \"timestamp\": time.time(),\n",

+ "            \"user_id\": user_id,\n",

+ "            \"method\": method,\n",

+ "            \"latency_ms\": latency_ms,\n",

+ "            \"input_tokens\": input_tokens,\n",

+ "            \"output_tokens\": output_tokens,\n",

+ "            \"total_tokens\": input_tokens + output_tokens,\n",

+ "            \"cache_status\": cache_status,\n",

+ "            \"response_source\": response_source,\n",

+ "            \"cost_usd\": cost,\n",

+ "            \"baseline_cost_usd\": baseline\n",

+ "        })\n",

+ "\n",

+ "    def show_logs(self):\n",

+ "        return pd.DataFrame(self.logs)\n",

+ "\n",

+ "    def summarize(self):\n",

+ "        df = pd.DataFrame(self.logs)\n",

+ "        if df.empty:\n",

+ "            print(\"No telemetry yet.\")\n",

+ "            return\n",

+ "\n",

+ "        df[\"total_tokens\"] = df[\"input_tokens\"] + df[\"output_tokens\"]\n",

+ "\n",

+ "        display(df[[\n",

+ "            \"user_id\",\n",

+ "            \"cache_status\",\n",

+ "            \"latency_ms\",\n",

+ "            \"response_source\",\n",

+ "            \"input_tokens\",\n",

+ "            \"output_tokens\",\n",

+ "            \"total_tokens\"\n",

+ "        ]])\n",

+ "\n",

+ "        # Compare cold start vs personalized\n",

+ "        try:\n",

+ "            cold_latency = df.loc[df[\"user_id\"] == \"user_cold\", \"latency_ms\"].values[0]\n",

+ "            cx_latency = df.loc[df[\"user_id\"] == \"user_withcontext\", \"latency_ms\"].values[0]\n",

+ "\n",

+ "            if cx_latency < cold_latency:\n",

+ "                delta = cold_latency - cx_latency\n",

+ "                pct = (delta / cold_latency) * 100\n",

+ "                print(f\"\\n⚡ Personalized response (user_withcontext) was faster than the plain LLM by {int(delta)} ms — a {pct:.1f}% speed boost.\")\n",

+ "            else:\n",

+ "                delta = cx_latency - cold_latency\n",

+ "                # Express the slowdown relative to the plain LLM baseline\n",

+ "                pct = (delta / cold_latency) * 100\n",

+ "                print(f\"\\n⏱️ Personalized response (user_withcontext) was {int(delta)} ms slower than the plain LLM — a {pct:.1f}% slowdown.\")\n",

+ "                print(\"📌 However, it returned a tailored response based on user memory, offering higher relevance.\")\n",

+ "        except Exception as e:\n",

+ "            print(\"\\n⚠️ Could not compute latency comparison:\", e)\n",

+ "\n",

+ "    def calculate_cost(self, model: str, input_tokens: int, output_tokens: int) -> float:\n",

+ "        # Illustrative per-1K-token prices hard-coded for this demo; check your provider's current pricing\n",

+ "        pricing = {\n",

+ "            \"gpt-4o\": {\"input\": 0.005, \"output\": 0.015},\n",

+ "            \"gpt-4o-mini\": {\"input\": 0.0015, \"output\": 0.003}\n",

+ "        }\n",

+ "\n",

+ "        # Unknown sources (e.g., \"cache\" or a custom deployment name) are treated as free\n",

+ "        if model not in pricing:\n",

+ "            return 0.0\n",

+ "\n",

+ "        input_cost = (input_tokens / 1000) * pricing[model][\"input\"]\n",

+ "        output_cost = (output_tokens / 1000) * pricing[model][\"output\"]\n",

+ "        return round(input_cost + output_cost, 6)\n",

+ "\n",

+ "    def display_cost_summary(self):\n",

+ "        df = self.show_logs()\n",

+ "        if df.empty:\n",

+ "            print(\"No telemetry logged yet.\")\n",

+ "            return\n",

+ "\n",

+ "        # Calculate savings per row\n",

+ "        df[\"savings_usd\"] = df[\"baseline_cost_usd\"] - df[\"cost_usd\"]\n",

+ "\n",

+ "        total_cost = df[\"cost_usd\"].sum()\n",

+ "        baseline_cost = df[\"baseline_cost_usd\"].sum()\n",

+ "        total_savings = df[\"savings_usd\"].sum()\n",

+ "        savings_pct = (total_savings / baseline_cost * 100) if baseline_cost > 0 else 0\n",

+ "\n",

+ "        # Display summary table\n",

+ "        display(df[[\n",

+ "            \"user_id\", \"cache_status\", \"response_source\",\n",

+ "            \"input_tokens\", \"output_tokens\", \"latency_ms\",\n",

+ "            \"cost_usd\", \"baseline_cost_usd\", \"savings_usd\"\n",

+ "        ]])\n",

+ "\n",

+ "        # 💸 Compare cost of plain LLM vs personalized\n",

+ "        try:\n",

+ "            cost_plain = df.loc[df[\"user_id\"] == \"user_cold\", \"cost_usd\"].values[0]\n",

+ "            cost_personalized = df.loc[df[\"user_id\"] == \"user_withcontext\", \"cost_usd\"].values[0]\n",

+ "\n",

+ "            print(f\"\\n🧾 Total Cost of Plain LLM Response: ${cost_plain:.4f}\")\n",

+ "            print(f\"🧾 Total Cost of Personalized Response: ${cost_personalized:.4f}\")\n",

+ "\n",

+ "            if cost_personalized < cost_plain:\n",

+ "                delta = cost_plain - cost_personalized\n",

+ "                pct = (delta / cost_plain) * 100\n",

+ "                print(f\"\\n💡 Personalized response (user_withcontext) was cheaper than plain LLM by ${delta:.4f} — a {pct:.1f}% cost improvement.\")\n",

+ "            else:\n",

+ "                delta = cost_personalized - cost_plain\n",

+ "                # Express the increase relative to the plain LLM baseline\n",

+ "                pct = (delta / cost_plain) * 100\n",

+ "                print(f\"\\n⏱️ Personalized response (user_withcontext) was ${delta:.4f} more expensive than plain LLM — a {pct:.1f}% cost increase.\")\n",

+ "                print(\"📌 However, it returned a tailored response based on user memory, offering higher relevance.\")\n",

+ "        except Exception as e:\n",

+ "            print(\"\\n⚠️ Could not compute cost comparison:\", e)"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {},

+ "source": [

+ "## LLM Client: The Intelligence Engine\n",

+ "\n",

+ "The `LLMClient` class serves as our interface to LLM services, handling both fresh content generation and response personalization:\n",

+ "\n",

+ "### Key Components:\n",

+ "- **Dual Model Strategy**: Uses GPT-4o for comprehensive responses and GPT-4o-mini for efficient personalization\n",

+ "- **Token Counting**: Tracks usage for accurate cost calculation and telemetry\n",

+ "- **Response Personalization**: Adapts cached responses using user context and memory\n",

+ "- **Performance Monitoring**: Measures latency and token consumption for each operation\n",

+ "\n",

+ "### Personalization Process:\n",

+ "When a cache hit occurs for a user with stored context, the system:\n",

+ "1. Takes the cached response as a baseline\n",

+ "2. Incorporates user-specific preferences, goals, and history\n",

+ "3. Generates a personalized variant using the lightweight GPT-4o-mini model\n",

+ "4. Maintains the core information while adapting tone and specific recommendations"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": 8,

+ "metadata": {

+ "id": "i3LSCGr3E1t8"

+ },

+ "outputs": [],

+ "source": [

+ "class LLMClient:\n",

+ " def __init__(self, client, token_counter, gpt4_model=\"gpt-4o\", gpt4mini_model=\"gpt-4o-mini\"):\n",

+ " self.client = client\n",

+ " self.token_counter = token_counter\n",

+ " self.gpt4_model = gpt4_model\n",

+ " self.gpt4mini_model = gpt4mini_model\n",

+ "\n",

+ "    def call_llm(self, prompt: str, model: str = \"gpt-4o\") -> Dict:\n",

+ "        \"\"\"Call the chat model and return its reply with latency and token counts.\"\"\"\n",

+ "        start_time = time.time()\n",

+ "        response = self.client.chat.completions.create(\n",

+ "            model=model,\n",

+ "            messages=[{\"role\": \"user\", \"content\": prompt}],\n",

+ "            temperature=0.7,\n",

+ "            max_tokens=200\n",

+ "        )\n",

+ "        latency = (time.time() - start_time) * 1000  # ms\n",

+ "\n",

+ "        # message.content is Optional in the OpenAI SDK; fall back to an empty string\n",

+ "        output = response.choices[0].message.content or \"\"\n",

+ "        input_tokens = self.token_counter.count_tokens(prompt)\n",

+ "        output_tokens = self.token_counter.count_tokens(output)\n",

+ "\n",

+ "        return {\n",

+ "            \"response\": output,\n",

+ "            \"latency_ms\": round(latency, 2),\n",

+ "            \"input_tokens\": input_tokens,\n",

+ "            \"output_tokens\": output_tokens,\n",

+ "            \"model\": model\n",

+ "        }\n",

+ "\n",

+ "    def call_gpt4(self, prompt: str) -> Dict:\n",

+ "        return self.call_llm(prompt, model=self.gpt4_model)\n",

+ "\n",

+ "    def call_gpt4mini(self, prompt: str) -> Dict:\n",

+ "        return self.call_llm(prompt, model=self.gpt4mini_model)\n",

+ "\n",

+ "    def personalize_response(self, cached_response: str, user_context: Dict, original_prompt: str) -> Dict:\n",

+ "        \"\"\"Adapt a cached response to the user's context with the lightweight model.\"\"\"\n",

+ "        context_prompt = self._build_context_prompt(cached_response, user_context, original_prompt)\n",

+ "        start_time = time.time()\n",

+ "        response = self.client.chat.completions.create(\n",

+ "            model=self.gpt4mini_model,\n",

+ "            messages=[\n",

+ "                {\"role\": \"system\", \"content\": context_prompt},\n",

+ "                {\"role\": \"user\", \"content\": \"Please personalize this cached response for the user. Keep your response under 3 sentences.\"}\n",

+ "            ]\n",

+ "        )\n",

+ "        latency = (time.time() - start_time) * 1000  # ms\n",

+ "        reply = response.choices[0].message.content\n",

+ "\n",

+ "        # Token counts reported by the API for this call\n",

+ "        input_tokens = response.usage.prompt_tokens\n",

+ "        output_tokens = response.usage.completion_tokens\n",

+ "        total_tokens = response.usage.total_tokens\n",

+ "\n",

+ "        return {\n",

+ "            \"response\": reply,\n",

+ "            \"latency_ms\": round(latency, 2),\n",

+ "            \"input_tokens\": input_tokens,\n",

+ "            \"output_tokens\": output_tokens,\n",

+ "            \"tokens\": total_tokens,\n",

+ "            \"model\": self.gpt4mini_model\n",

+ "        }\n",

+ "\n",

+ "    def _build_context_prompt(self, cached_response: str, user_context: Dict, prompt: str) -> str:\n",

+ "        context_parts = []\n",

+ "        if user_context.get(\"preferences\"):\n",

+ "            context_parts.append(\"User preferences: \" + \", \".join(user_context[\"preferences\"]))\n",

+ "        if user_context.get(\"goals\"):\n",

+ "            context_parts.append(\"User goals: \" + \", \".join(user_context[\"goals\"]))\n",

+ "        if user_context.get(\"history\"):\n",

+ "            context_parts.append(\"User history: \" + \", \".join(user_context[\"history\"]))\n",

+ "        context_blob = \"\\n\".join(context_parts)\n",

+ "        return f\"\"\"You are a personalization assistant. A cached response was previously generated for the prompt: \"{prompt}\".\n",

+ "\n",

+ "Here is the cached response:\n",

+ "\\\"\\\"\\\"{cached_response}\\\"\\\"\\\"\n",

+ "\n",

+ "Use the user's context below to personalize and refine the response:\n",

+ "{context_blob}\n",

+ "\n",

+ "Respond in a way that feels tailored to this user, adjusting tone, content, or suggestions as needed. Keep your response under 3 sentences no matter what.\n",

+ "\"\"\""

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {},

+ "source": [

+ "## Context-Enabled Semantic Cache: The Core Engine\n",

+ "\n",

+ "The `ContextEnabledSemanticCache` class orchestrates the entire caching and personalization workflow:\n",

+ "\n",

+ "### Architecture Overview:\n",

+ "- **Vector Storage**: Uses Redis with HNSW indexing for fast semantic similarity search\n",

+ "- **User Memory System**: Maintains preferences, goals, and history for each user\n",

+ "- **Three-Tier Response Strategy**:\n",

+ "  - **Cache Miss**: Generate fresh response using GPT-4o (comprehensive but expensive)\n",

+ "  - **Cache Hit (No Context)**: Return cached response instantly (fast and free)\n",

+ "  - **Cache Hit (With Context)**: Personalize cached response using GPT-4o-mini (fast and cheap)\n",

+ "\n",

+ "### Key Methods:\n",

+ "- `add_user_memory()`: Store user context (preferences, goals, history)\n",

+ "- `search_cache()`: Find semantically similar cached responses using vector search\n",

+ "- `store_response()`: Save new responses with TTL and vector embeddings\n",

+ "- `query()`: Main entry point that determines cache hit/miss and response strategy\n",

+ "\n",

+ "### Performance Benefits:\n",

+ "- **Speed**: Cache hits without personalization can return in under 100 ms, versus 2-5 seconds for fresh generation; personalized hits add one GPT-4o-mini call\n",

+ "- **Cost**: 60-80% savings on repeat queries through caching and model optimization\n",

+ "- **Relevance**: Personalized responses feel tailored to each user's context and expertise"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": 9,

+ "metadata": {

+ "id": "6APF2GQaE3fm"

+ },

+ "outputs": [],

+ "source": [

+ "from redisvl.query import VectorQuery\n",

+ "\n",

+ "class ContextEnabledSemanticCache:\n",

+ "    def __init__(self, redis_index, vectorizer, llm_client: \"LLMClient\", telemetry: \"TelemetryLogger\", cache_ttl: int = -1):\n",

+ "        self.index = redis_index\n",

+ "        self.vectorizer = vectorizer\n",

+ "        self.llm = llm_client\n",

+ "        self.telemetry = telemetry\n",

+ "        self.user_memories: Dict[str, Dict] = {}\n",

+ "        self.cache_ttl = cache_ttl  # seconds, -1 for no expiry\n",

+ "\n",

+ "    def add_user_memory(self, user_id: str, memory_type: str, content: str):\n",

+ "        if user_id not in self.user_memories:\n",

+ "            self.user_memories[user_id] = {\"preferences\": [], \"history\": [], \"goals\": []}\n",

+ "        self.user_memories[user_id][memory_type].append(content)\n",

+ "\n",

+ "    def get_user_memory(self, user_id: str) -> Dict:\n",

+ "        return self.user_memories.get(user_id, {})\n",

+ "\n",

+ "    def generate_embedding(self, text: str) -> List[float]:\n",

+ "        # Disable progress bar for cleaner output\n",

+ "        return self.vectorizer.embed(text, show_progress_bar=False)\n",

+ "\n",

+ "\n",

+ "    def search_cache(\n",

+ "        self,\n",

+ "        embedding: List[float],\n",

+ "        distance_threshold: float = 0.2,  # Max cosine distance for a hit; loosened for consistency\n",

+ "    ):\n",

+ "        \"\"\"\n",

+ "        Find the best cached match and gate it by a distance threshold.\n",

+ "        The score returned by RediSearch (HNSW + cosine) is a distance (lower is better).\n",

+ "        We accept a hit if distance <= distance_threshold; otherwise return None.\n",

+ "        \"\"\"\n",

+ "        return_fields = [\"content\", \"user_id\", \"prompt\", \"model\", \"created_at\"]\n",

+ "        query = VectorQuery(\n",

+ "            vector=embedding,\n",

+ "            vector_field_name=\"content_vector\",\n",

+ "            return_fields=return_fields,\n",

+ "            num_results=1,\n",

+ "            return_score=True,\n",

+ "        )\n",

+ "        results = self.index.query(query)\n",

+ "\n",

+ "        if results:\n",

+ "            first = results[0]\n",

+ "            # Use 'vector_distance' which is the standard score field in redisvl\n",

+ "            score = first.get(\"vector_distance\", None)\n",

+ "            if score is not None and float(score) <= distance_threshold:\n",

+ "                # .get() avoids a KeyError if a stored entry lacks one of the fields\n",

+ "                return {field: first.get(field) for field in return_fields}\n",

+ "\n",

+ "        return None\n",

+ "\n",