
Merge branch 'newdocs' of https://github.com/JuliaConstraints/JuliaConstraints.github.io into newdocs


Showing 5 changed files with 122 additions and 5 deletions.


# API

Here's the API for PerfChecker.jl.

```@autodocs
Modules=[PerfChecker]
```


Options specific to this backend with their default values are defined as:

```
# …
:samples => nothing
:gc => true
```


# PerfChecker.jl

Documentation for `PerfChecker.jl`.

PerfChecker.jl is a package designed to help package authors easily test the performance of their packages. To achieve that, it provides the following features:

- The main macro `@check`, which provides an easy-to-use interface over various backends (such as BenchmarkTools.jl or Chairmarks.jl), configurable via a dictionary.
- (WIP) A CI for reproducible performance testing.
- Visualization of different metrics from `@check` using Makie.jl.

## Usage

The primary usage of PerfChecker.jl looks like this:

```julia
using PerfChecker
# optionally, load a backend package such as BenchmarkTools or Chairmarks
config = Dict(:option1 => "value1", :option2 => :value2)

results = @check :name_of_backend config begin
    # preparatory code goes here
end begin
    # the code block to be performance tested goes here
end

# Visualization of the results
using CairoMakie  # or another Makie backend
checkres_to_scatterlines(results, Val(:name_of_backend))
```
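To make the two-block shape of `@check` concrete, here is a toy macro, `@toy_check`, which is hypothetical and not part of PerfChecker: it runs the first block once as setup and then times the second block five times with Julia's `@elapsed`. PerfChecker itself delegates measurement to the selected backend, so this only illustrates how code is meant to be split between the two blocks.

```julia
# Toy, hypothetical macro (NOT PerfChecker's implementation) mirroring only
# the shape of `@check`: a setup block followed by a block to be measured.
macro toy_check(setup, body)
    quote
        let
            $(esc(setup))                  # preparatory code, run once
            elapsed_times = Float64[]
            for _ in 1:5                   # time the second block 5 times
                push!(elapsed_times, @elapsed $(esc(body)))
            end
            elapsed_times                  # seconds per run
        end
    end
end

timings = @toy_check begin
    data = collect(1:1_000)                # setup: build the input once
end begin
    sum(data)                              # measured: the code under test
end
```

Variables defined in the setup block, such as `data`, are visible in the measured block, while the setup cost itself is excluded from the timings.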

The config dictionary can take many options, depending on the backend.

Some of the commonly used options are:

- `:path` => The path to the default Julia environment used when creating a new process.
- `:pkgs` => The package versions to test performance for, given as a `Tuple` `(name::String, option::Symbol, versions::Vector{VersionNumber}, last_or_first::Bool)`, where:
    - `name` is the name of the package.
    - `option` is one of 5 symbols:
        - `:patches`: last patch or first patch of a version
        - `:breaking`: last breaking or next breaking version
        - `:major`: previous or next major version
        - `:minor`: previous or next minor version
        - `:custom`: custom version numbers (provide any boolean value for `last_or_first` in this case, as it doesn't matter)
    - `versions`: the input versions for the provided `option`
    - `last_or_first`: selects which of the two alternatives of the provided `option` is used
- `:tags` => A list of tags (a vector of symbols) to easily tag performance tests.
- `:devops` => A custom input to `Pkg.develop`, intended for comparing the performance of a local development branch of a package against previous versions. It can often be given simply as `:devops => "MyPackageName"`.
- `:threads` => An integer selecting the number of threads to start Julia with.
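As an illustration of what an `option` such as `:patches` could mean, the sketch below picks the first or last patch release sharing the major and minor version of a given `VersionNumber`. The helper `select_patch`, the list of available versions, and the mapping of `true` to "last" are assumptions made for illustration; this is not PerfChecker's implementation.

```julia
# Hypothetical helper, NOT PerfChecker's implementation: one possible reading of
# the `:patches` option. Among `available`, keep the releases with the same
# major.minor as `v`, then return the newest (pick_last) or oldest of them.
function select_patch(available::Vector{VersionNumber}, v::VersionNumber, pick_last::Bool)
    same_minor = filter(u -> u.major == v.major && u.minor == v.minor, available)
    isempty(same_minor) && error("no release matches $(v.major).$(v.minor)")
    return pick_last ? maximum(same_minor) : minimum(same_minor)
end

# Assumed list of released versions of some package
available = [v"0.2.0", v"0.2.1", v"0.2.4", v"0.3.0"]

newest = select_patch(available, v"0.2.0", true)   # v"0.2.4"
oldest = select_patch(available, v"0.2.3", false)  # v"0.2.0"
```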

Check out the documentation of the individual backends for their specific options and default values.
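Putting the common options together, a configuration for `@check` might look like the following sketch. `MyPackage` and its versions are hypothetical placeholders, and the path key is spelled `:path` as in the tutorial; backend-specific keys (such as `:evals` or `:samples` for Chairmarks in the tutorial) would go in the same dictionary.

```julia
# Sketch of a configuration combining the common options.
# "MyPackage" and the version numbers are hypothetical placeholders.
config = Dict(
    :path => @__DIR__,              # default environment for new processes
    :pkgs => ("MyPackage", :custom, [v"0.1.0", v"0.2.0"], true),
    :tags => [:core, :io],          # free-form tags for this performance test
    :devops => "MyPackage",         # also test the local development version
    :threads => 2,                  # start Julia with 2 threads
)
```

The dictionary is then passed as the second argument, as in `@check :name_of_backend config begin ... end begin ... end`.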


# Tutorial

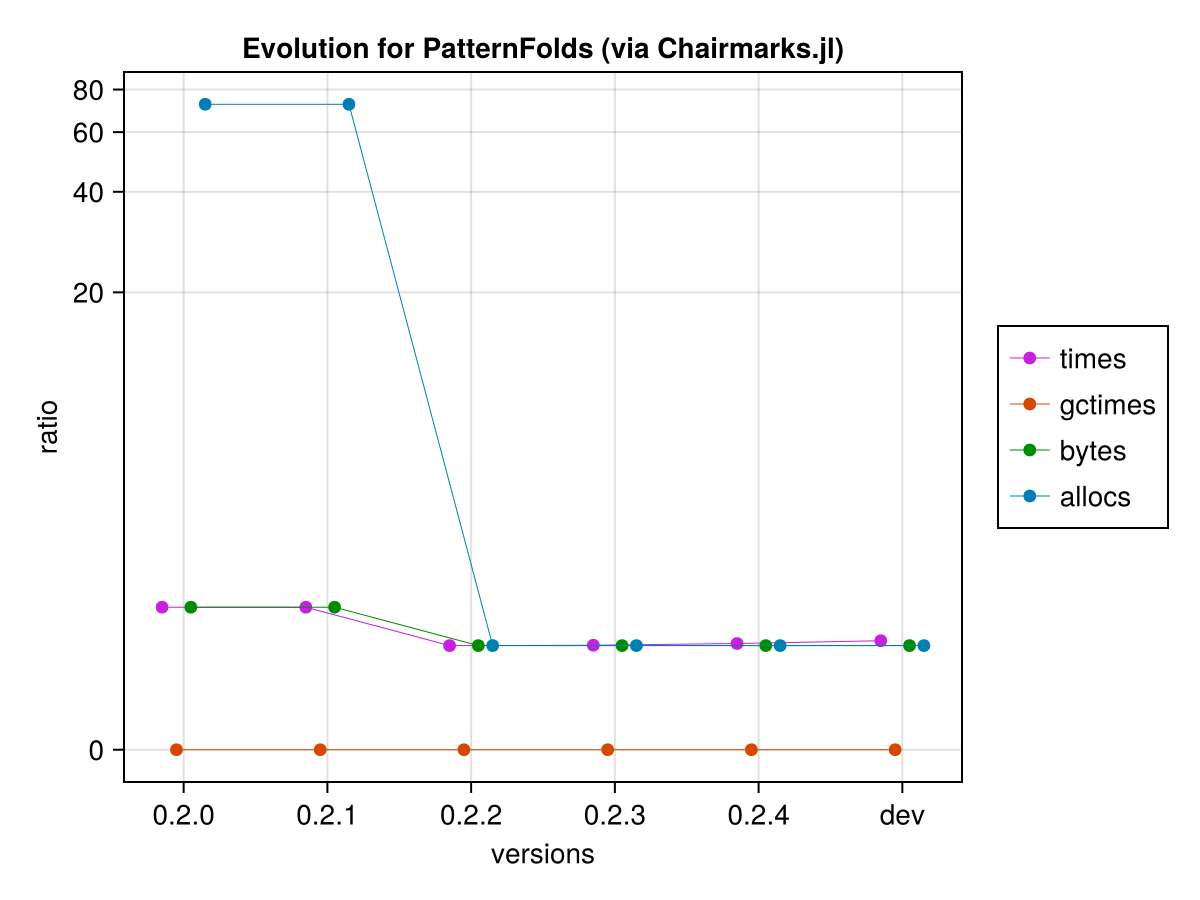

Taken from the PerfChecker.jl examples, this is a guide for performance testing the PatternFolds.jl package using Chairmarks.jl.

Using PerfChecker.jl requires an active environment in which the script's dependencies (here PerfChecker.jl, Chairmarks.jl, and CairoMakie.jl) are installed.

The actual script looks like this:

```julia
using PerfChecker, Chairmarks, CairoMakie

# Configuration: Chairmarks settings (:evals, :samples, :seconds), tags,
# the PatternFolds versions to compare, and the local development version
d = Dict(:path => @__DIR__, :evals => 10, :samples => 1000,
    :seconds => 100, :tags => [:patterns, :intervals],
    :pkgs => (
        "PatternFolds", :custom, [v"0.2.0", v"0.2.1", v"0.2.2", v"0.2.3", v"0.2.4"], true),
    :devops => "PatternFolds")

# First block: setup; second block: the code that is benchmarked
x = @check :chairmark d begin
    using PatternFolds
end begin
    # Intervals
    itv = Interval{Open, Closed}(0.0, 1.0)
    i = IntervalsFold(itv, 2.0, 1000)

    unfold(i)
    collect(i)
    reverse(collect(i))

    # Vectors
    vf = make_vector_fold([0, 1], 2, 1000)

    unfold(vf)
    collect(vf)
    reverse(collect(vf))

    rand(vf, 1000)

    return nothing
end

# Plot the results and save them in a `visuals` folder
mkpath(joinpath(@__DIR__, "visuals"))

c = checkres_to_scatterlines(x, Val(:chairmark))
save(joinpath(@__DIR__, "visuals", "chair_evolution.png"), c)

for kwarg in [:times, :gctimes, :bytes, :allocs]
    c2 = checkres_to_boxplots(x, Val(:chairmark); kwarg)
    save(joinpath(@__DIR__, "visuals", "chair_boxplots_$kwarg.png"), c2)
end
```

Here `d` is the configuration dictionary, and `x` stores the results of the performance tests.

The code after the macro call creates a `visuals` folder and saves the resulting plots in it, including:

- Boxplots from Chairmarks for allocations (`chair_boxplots_allocs.png`)
- Boxplots from Chairmarks for times (`chair_boxplots_times.png`)
- Evolution of different metrics across versions according to Chairmarks (`chair_evolution.png`)
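The plotting calls in the script rely on two Julia features: dispatch on a value type such as `Val(:chairmark)`, presumably so that each backend can have its own plotting method, and the keyword shorthand `f(x; kwarg)`, which means `f(x; kwarg = kwarg)` (available since Julia 1.5). The sketch below shows both with a hypothetical function `plot_title`; it is not part of PerfChecker, and `:some_backend` is a made-up backend name.

```julia
# Hypothetical stand-ins (NOT PerfChecker functions).
# A specific method for the `:chairmark` backend ...
plot_title(::Val{:chairmark}; kwarg::Symbol = :times) = "Chairmarks boxplot of $kwarg"
# ... and a generic fallback for any other backend symbol `B`.
plot_title(::Val{B}; kwarg::Symbol = :times) where {B} = "$B boxplot of $kwarg"

titles = String[]
for kwarg in [:times, :gctimes, :bytes, :allocs]
    # `; kwarg` is shorthand for `; kwarg = kwarg`
    push!(titles, plot_title(Val(:chairmark); kwarg))
end

# Falls back to the generic method
other = plot_title(Val(:some_backend); kwarg = :allocs)
```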