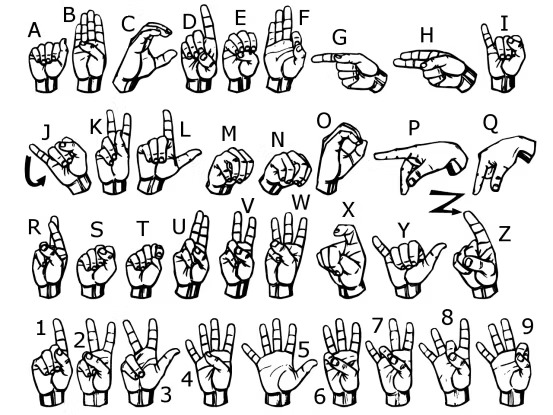

A real-time Sign Language Recognition System built with computer vision and deep learning.

This project captures hand gesture data (digits and letters of the alphabet) and classifies it with a CNN model built with TensorFlow/Keras. It uses OpenCV and MediaPipe to track the hand in the video feed for gesture recognition.
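A gesture classifier like this typically sees only the hand rather than the whole frame. The sketch below shows one way to turn a detected hand's bounding box into a padded, square crop region that stays inside the frame. It assumes the detector reports the box as `(x, y, w, h)` in pixels (the layout cvzone's `HandDetector` uses for its `bbox` field); the function name `square_crop_box` and the `padding` default are hypothetical and not taken from this project.

```python
def square_crop_box(bbox, frame_w, frame_h, padding=20):
    """Return (x1, y1, x2, y2) for a square crop around a hand bounding box.

    Assumption: bbox is (x, y, w, h) in pixels. The square side is the
    larger of w and h plus `padding` on each side, centred on the box.
    """
    x, y, w, h = bbox
    side = max(w, h) + 2 * padding
    # Centre of the detected hand box.
    cx = x + w // 2
    cy = y + h // 2
    x1 = cx - side // 2
    y1 = cy - side // 2
    x2 = x1 + side
    y2 = y1 + side
    # Clamp to the frame so slicing frame[y1:y2, x1:x2] stays in range
    # (the crop may become non-square when the hand is near an edge).
    x1, y1 = max(0, x1), max(0, y1)
    x2, y2 = min(frame_w, x2), min(frame_h, y2)
    return x1, y1, x2, y2
```

With an OpenCV frame, the result can be used as `crop = frame[y1:y2, x1:x2]` (NumPy slicing, rows first) and then resized to the CNN's input size, for example with `cv2.resize`. Padding keeps fingertips that sit on the box edge from being cut off.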

- Python: Core programming language

- OpenCV: For image and video capture & processing

- MediaPipe: Detects hand landmarks/keypoints

- cvzone: Simplifies integration between OpenCV and MediaPipe

- TensorFlow / Keras: For building and training the CNN model

- Convolutional Neural Network (CNN): Used for gesture classification
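After the CNN outputs one probability per class, the top prediction has to be mapped back to a digit or letter. A minimal sketch, assuming a hypothetical label order of the digits 0 through 9 followed by the letters A through Z (36 classes); the project's real class order depends on how its training data was organised, and `decode_prediction` and `threshold` are illustrative names.

```python
import string

# Hypothetical label order: digits first, then uppercase letters (36 classes).
LABELS = list(string.digits) + list(string.ascii_uppercase)


def decode_prediction(probs, labels=LABELS, threshold=0.0):
    """Return (label, confidence) for the highest-probability class.

    `probs` is one row of softmax output, e.g. model.predict(batch)[0],
    given as any sequence of floats with one entry per label.
    Returns (None, confidence) when the best score is below `threshold`.
    """
    if len(probs) != len(labels):
        raise ValueError(f"expected {len(labels)} scores, got {len(probs)}")
    # Index of the largest probability (argmax).
    best = max(range(len(probs)), key=lambda i: probs[i])
    confidence = float(probs[best])
    if confidence < threshold:
        return None, confidence
    return labels[best], confidence
```

A threshold lets a real-time loop skip frames where the model is unsure instead of flickering between labels.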

Note: This system is designed to work for both right- and left-handed signers.
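One common way to support both hands with a single classifier is to mirror left-hand input so it resembles right-hand input before classification. This README does not say how the project handles it, so the following is only an illustration: it flips normalized landmark x-coordinates (MediaPipe Hands reports x and y in the range 0 to 1). The function name and the `"Left"`/`"Right"` handedness strings are assumptions.

```python
def normalize_handedness(landmarks, handedness):
    """Mirror a left hand's landmarks so it resembles a right hand.

    landmarks: list of (x, y, z) tuples with x and y normalized to [0, 1].
    handedness: "Left" or "Right" (assumed label strings).
    Right-hand landmarks are returned unchanged, as a new list.
    """
    if handedness == "Left":
        # Horizontal mirror: x -> 1 - x; y and depth are unaffected.
        return [(1.0 - x, y, z) for x, y, z in landmarks]
    if handedness == "Right":
        return list(landmarks)
    raise ValueError(f"unknown handedness: {handedness!r}")
```

For image-based input, the equivalent step is a horizontal flip such as `cv2.flip(crop, 1)`. MediaPipe's handedness label assumes a mirrored (selfie-style) image, so it is worth checking the label against the actual camera setup before mirroring.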